In the tenth part of this tutorial series on developing PHP on Docker we will create a production infrastructure for a dockerized PHP application on GCP using multiple VMs and managed services for redis and mysql. You'll learn how to create the corresponding infrastructure on GCP via the UI as well as through the gcloud cli.

All code samples are publicly available in my

Docker PHP Tutorial repository on Github.

You can find the branch with the final result of this tutorial at

part-10-create-production-infrastructure-php-app-gcp.

CAUTION: With this codebase it is not possible to deploy any longer! Please refer to the next part Deploy dockerized PHP Apps to production - using multiple VMS and managed mysql and redis instances from GCP for the code to enable deployments again.

All published parts of the Docker PHP Tutorial are collected under a dedicated page at Docker PHP Tutorial. The previous part was Deploy dockerized PHP Apps to production on GCP via docker compose as a POC.

If you want to follow along, please subscribe to the RSS feed or via email to get automatic notifications when the next part comes out :)


Introduction

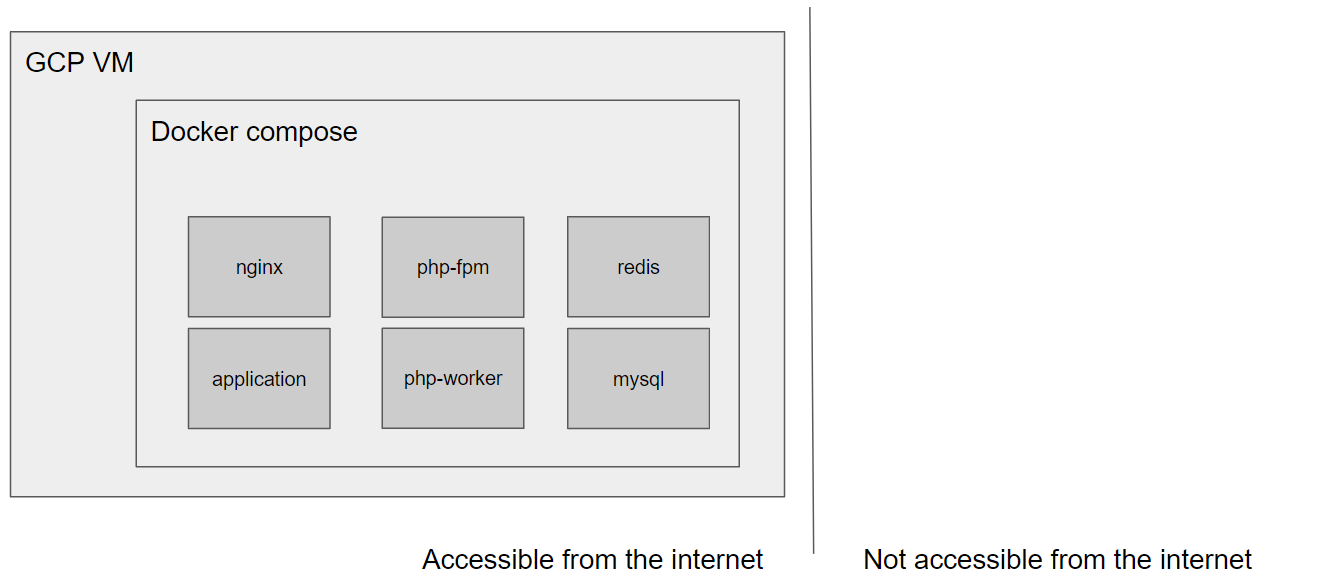

In Deploy dockerized PHP Apps to production on GCP via docker compose as a POC

we've created a single Compute Instance VM, provisioned it with docker compose and

ran our full docker compose setup on it. In other words: All containers ran on the same VM

(that had to be reachable from the internet).

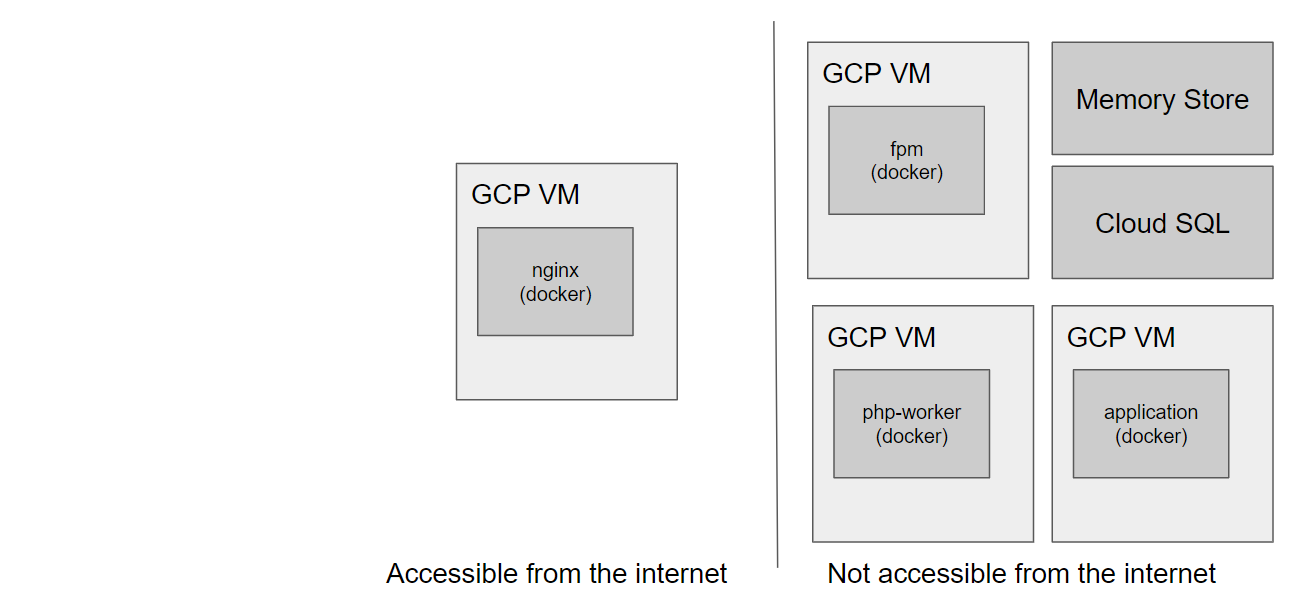

In this part we want to split this setup up so that:

- each docker container runs on its own VM
- redis and mysql won't run in docker containers but as the managed GCP products Cloud SQL for MySQL and Redis Memorystore

In addition, we want to expose only the nginx service to the internet - all other VMs should

communicate via private IP addresses.

Run the code yourself

I recommend creating a completely new GCP project to have a "clean slate" that ensures that everything works out of the box as intended.

# Prepare the codebase

git clone https://github.com/paslandau/docker-php-tutorial.git && cd docker-php-tutorial

git checkout part-10-create-production-infrastructure-php-app-gcp

# Run the initialization.

make dev-init

# Note:

# You don't have to follow the additional instructions of the `dev-init` target

# for this part of the tutorial.

# The following steps need to be done manually:

#

# - Create a new GCP project and "master" service account with Owner permissions.

# - Create a key file for that master service account and place it in the root of the codebase at

# ./gcp-master-service-account-key.json

#

# @see https://www.pascallandau.com/blog/gcp-compute-instance-vm-docker/#preconditions-project-and-owner-service-account

ls ./gcp-master-service-account-key.json

# Should NOT fail with

# ls: cannot access './gcp-master-service-account-key.json': No such file or directory

# Update the variables `DOCKER_REGISTRY` and `GCP_PROJECT_ID` in `.make/variables.env`

projectId="SET YOUR GCP_PROJECT_ID HERE"

# CAUTION: Mac users might need to use `sed -i '' -e` instead of `sed -i`!

# @see https://stackoverflow.com/a/19457213/413531

sed -i "s/DOCKER_REGISTRY=.*/DOCKER_REGISTRY=gcr.io\/${projectId}/" .make/variables.env

sed -i "s/GCP_PROJECT_ID=.*/GCP_PROJECT_ID=${projectId}/" .make/variables.env

make docker-build

make docker-up

make gpg-init

make secret-decrypt

# Then run

make infrastructure-setup ROOT_PASSWORD="production_secret_mysql_root_password"

# FYI: This step can take 15 to 30 minutes to complete.

# Verify the setup

make infrastructure-info

Should show something like this

$ make infrastructure-info
NAME            ZONE           MACHINE_TYPE  PREEMPTIBLE  INTERNAL_IP  EXTERNAL_IP    STATUS
application-vm  us-central1-a  e2-micro                   10.128.0.5                  RUNNING
nginx-vm        us-central1-a  e2-micro                   10.128.0.3   34.134.120.87  RUNNING
php-fpm-vm      us-central1-a  e2-micro                   10.128.0.2                  RUNNING
php-worker-vm   us-central1-a  e2-micro                   10.128.0.4                  RUNNING

INSTANCE_NAME  VERSION    REGION       TIER   SIZE_GB  HOST           PORT  NETWORK  RESERVED_IP       STATUS  CREATE_TIME
redis-vm       REDIS_6_X  us-central1  BASIC  1        10.137.150.67  6379  default  10.137.150.64/29  READY   2022-09-12T11:22:14

NAME      DATABASE_VERSION  LOCATION       TIER              PRIMARY_ADDRESS  PRIVATE_ADDRESS  STATUS
mysql-vm  MYSQL_8_0         us-central1-b  db-custom-1-3840  -                10.111.0.3       RUNNABLE
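
The three tables in this output look like the default list output of the Compute Engine, Memorystore and Cloud SQL commands of the gcloud cli. As a rough sketch, the same information could be retrieved as follows. The function name `infrastructure_info` is made up for this example, and the actual `infrastructure-info` target in the repository may be implemented differently.

```shell
# Hypothetical helper: print the VMs, Redis instances and MySQL instances of a project.
# $1: GCP project id
# $2: region of the Redis Memorystore instance
infrastructure_info() {
  local project_id="$1" region="$2"
  gcloud compute instances list --project="${project_id}"
  gcloud redis instances list --project="${project_id}" --region="${region}"
  gcloud sql instances list --project="${project_id}"
}

# Usage:
#   infrastructure_info "my-project-id" us-central1
```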

The following video shows the full process (excluding most of the waiting time)

Additional GCP concepts

IPs, Networking and VPCs (Virtual Private Cloud)

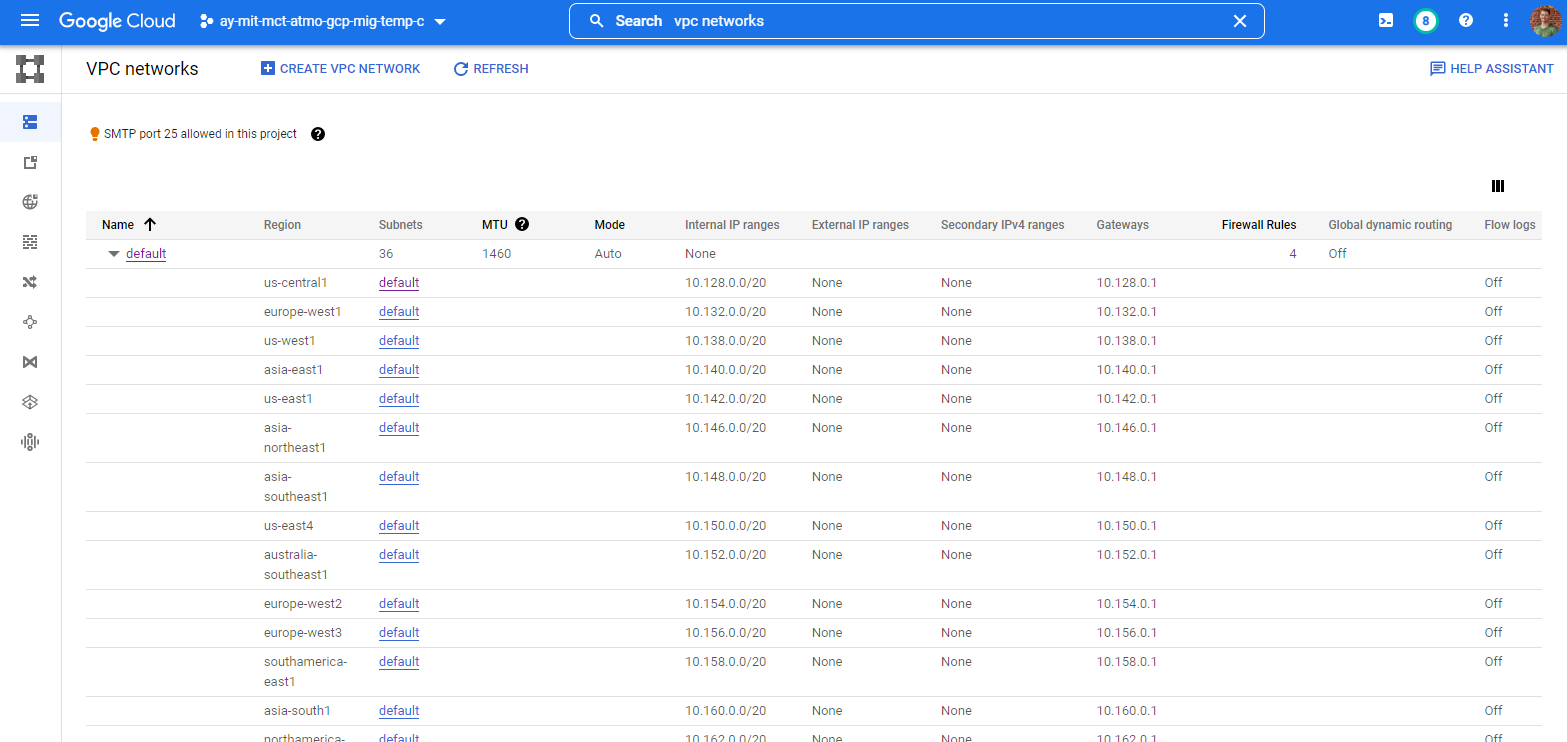

In order to restrict access to VMs from the internet, we need to ensure that GCP does not assign them a public IP address. They still need to be able to communicate with each other though and thus need a private IP address within the same network / VPC (Virtual Private Cloud).

So far, we didn't need to think about that at all, because

GCP creates a default network (a

so-called auto mode VPC network) called default for each project, and we have simply used it

as-is. But there are

a number of reasons why not using the default network is a good idea,

e.g.

- unnecessary subnetworks (one for each region - we'll only need one region for our setup)

- overly permissive firewall rules (e.g. for port 3389 for the Microsoft Remote Desktop Protocol [RDP].)

But for now the default network is "good enough", because it's not per-se insecure (as in

"nobody from the outside world has access to it"), and we'll tackle this and some other security

hardening measures in a later tutorial.

You can find the default network in the

VPC networks UI
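
To get an impression of the pre-populated firewall rules mentioned above, you can also list them via the gcloud cli. The following is a minimal sketch. The function name is hypothetical, and the filter simply relies on the fact that the pre-populated rules of the default network are named `default-allow-icmp`, `default-allow-internal`, `default-allow-rdp` and `default-allow-ssh`.

```shell
# Hypothetical helper: list the firewall rules that GCP pre-populates for the default network.
# $1: GCP project id
list_default_firewall_rules() {
  local project_id="$1"
  # "~" is the regular expression match operator of gcloud filters
  gcloud compute firewall-rules list \
    --project="${project_id}" \
    --filter="name~^default-allow"
}

# Usage:
#   list_default_firewall_rules "my-project-id"
```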

Routers and NATs

Using only private IP addresses comes with a non-obvious caveat: Compute Instance VMs can no longer be reached from the internet, but they also can no longer reach the internet themselves, as per the GCP docs:

By default, when a Compute Engine VM lacks an external IP address assigned to its network interface, it can only send packets to other internal IP address destinations.

This is a problem for a number of reasons, e.g.

- you can't install new software on the VM (e.g. apt-get will fail)
- it's impossible to retrieve docker images from the registry
- the application itself might need to make HTTP requests to public APIs

Fortunately, there is a simple solution: NAT. NAT is short for Network Address Translation and is what happens for basically any private internet connection at home: Your router "translates" the internal IP address of your local machine to a public one, so that the response packets can be routed back to the router and from there to your local machine.

GCP offers the same functionality via Cloud NAT.


We must first create a Cloud Router that serves as the control plane for Cloud NAT. The creation process is explained in the GCP Cloud Router Guide: Create a Cloud Router. Please note that it's not necessary to assign an ASN to routers that are only used for Cloud NAT:

Note: Cloud NAT does not use ASN information. Cloud NAT gateways can be connected to Cloud Routers that have any ASN or that have no ASN specified.

Once the Cloud Router exists, we can create a Cloud NAT following the steps outlined in the GCP Cloud NAT Guide: Set up and manage network address translation with Cloud NAT.

The following video explains the full process via the Cloud Console UI by showing that a Compute

Instance VM without external IP address cannot reach http://example.com/ unless a Cloud NAT is

created for the same network.
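
The same check can be sketched on the command line. The helper below is hypothetical (it is not part of the tutorial repository): it logs into a VM through an IAP tunnel and runs `curl` against http://example.com/. On a VM without external IP address, the request should time out as long as no Cloud NAT exists and print the HTTP status code once the NAT gateway is in place.

```shell
# Hypothetical helper: run curl on a VM through an IAP tunnel and print the HTTP status code.
# $1: GCP project id, $2: VM name, $3: zone of the VM
check_vm_internet_access() {
  local project_id="$1" vm_name="$2" zone="$3"
  gcloud compute ssh "${vm_name}" \
    --project="${project_id}" \
    --zone="${zone}" \
    --tunnel-through-iap \
    --command="curl -sS --max-time 10 -o /dev/null -w '%{http_code}\n' http://example.com/"
}

# Usage:
#   check_vm_internet_access "my-project-id" php-fpm-vm us-central1-a
```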

Creating a Cloud Router and a Cloud NAT gateway can also be done via gcloud cli. I've added

the following commands to .infrastructure/setup-gcp.sh

region=us-central1

router_name=default-router

nat_name=default-nat-gateway

network="default"

gcloud compute routers create "${router_name}" \

--region="${region}" \

--network="${network}"

gcloud compute routers nats create "${nat_name}" \

--router="${router_name}" \

--router-region="${region}" \

--auto-allocate-nat-external-ips \

--nat-all-subnet-ip-ranges
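
Running the `create` command a second time fails, because the router already exists. If you want to be able to re-run the setup, one option is to check for the router first. This is only a sketch under the assumption that a failing `describe` call means "router not found" (it can also fail for other reasons, e.g. missing permissions). The function name `ensure_router` is made up.

```shell
# Hypothetical helper: create the Cloud Router only if it does not exist yet.
# $1: router name, $2: region, $3: network
ensure_router() {
  local router_name="$1" region="$2" network="$3"
  # `describe` exits with a non-zero code if the router cannot be found
  if gcloud compute routers describe "${router_name}" --region="${region}" > /dev/null 2>&1; then
    echo "Router ${router_name} already exists - skipping"
    return 0
  fi
  gcloud compute routers create "${router_name}" \
    --region="${region}" \
    --network="${network}"
}

# Usage:
#   ensure_router default-router us-central1 default
```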

gcloud cli docs:

IP range allocations, VPC Peering and private service access

Since we will be using managed services for mysql and redis, we need to create a so-called VPC peering with the Google Cloud Platform Service Producer. This is necessary, because GCP will create dedicated VPCs for MySQL Cloud SQL and Redis Memorystore instances. See also the GCP VPC Guides:

Since we don't want to assign public IPs, we need to "peer" our default VPC with those dedicated service VPCs. I have explained this in more detail in my MySQL Cloud SQL article for connecting via private IP.

In short:

- we must allocate a certain range of IPs in the default network
- this range is then assigned to the VPC peering for Google Cloud Platform Services
- in consequence, the redis and mysql instances will receive an IP address from the allocated IP range and are thus "visible" for all other VMs in the default network
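
To make the idea of an "allocated IP range" a bit more tangible, here is a small sketch that checks whether an IP address belongs to a CIDR range. The function names are made up for this example. With a range of `10.111.0.0/16` (the one used further below), the private address `10.111.0.3` of the mysql instance from the example output lies inside the range, whereas the address `10.128.0.5` of a Compute Instance VM does not.

```shell
# Convert a dotted IPv4 address to its integer representation
ip_to_int() {
  local IFS=.
  # Intentionally unquoted: split the address at the dots into $1..$4
  set -- $1
  echo $(( ($1 << 24) + ($2 << 16) + ($3 << 8) + $4 ))
}

# Exit code 0 if the IP ($1) lies within the CIDR range ($2), 1 otherwise
ip_in_cidr() {
  local ip="$1" cidr="$2"
  local network="${cidr%/*}" prefix="${cidr#*/}"
  # Bit mask with the first <prefix> bits set
  local mask=$(( (0xFFFFFFFF << (32 - prefix)) & 0xFFFFFFFF ))
  [ $(( $(ip_to_int "$ip") & mask )) -eq $(( $(ip_to_int "$network") & mask )) ]
}

# Usage:
#   ip_in_cidr 10.111.0.3 10.111.0.0/16 && echo "inside the allocated range"
#   ip_in_cidr 10.128.0.5 10.111.0.0/16 || echo "outside the allocated range"
```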

See also the Example in the MySQL Guide: Learn about using private IP:

This video shows the full procedure to create an IP range allocation and a VPC peering to enable private access on a MySQL Cloud SQL instance:

Creating an IP range allocation and a VPC peering can also be done via gcloud cli. I've added

the following commands to .infrastructure/setup-gcp.sh

network="default"

private_vpc_range_name="google-managed-services-vpc-allocation"

ip_range_network_address="10.111.0.0"

gcloud compute addresses create "${private_vpc_range_name}" \

--global \

--purpose=VPC_PEERING \

--prefix-length=16 \

--description="Peering range for Google" \

--network="${network}" \

--addresses="${ip_range_network_address}"

gcloud services vpc-peerings connect \

--service=servicenetworking.googleapis.com \

--ranges="${private_vpc_range_name}" \

--network="${network}"
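
To double-check the result, the allocated range and the peering can be inspected afterwards. The following sketch uses a hypothetical function name and is not part of `.infrastructure/setup-gcp.sh`.

```shell
# Hypothetical helper: show the allocated IP range and the peerings of a network.
# $1: network name, $2: name of the IP range allocation
verify_private_service_access() {
  local network="$1" range_name="$2"
  # Prints the start address and the prefix length of the allocated range
  gcloud compute addresses describe "${range_name}" --global --format="value(address,prefixLength)"
  # Lists the peerings between the network and the service producer
  gcloud services vpc-peerings list --network="${network}" --service=servicenetworking.googleapis.com
}

# Usage:
#   verify_private_service_access default google-managed-services-vpc-allocation
```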

gcloud cli docs:

Additional Service APIs and IAM roles

Since we are using even more GCP services than before, we need to enable the corresponding APIs as well. Those are:

- servicenetworking.googleapis.com

- sqladmin.googleapis.com

- required for the Cloud SQL for MySQL setup and management

- redis.googleapis.com

- required for Redis Memorystore setup and management

As part of the deployment process in the next part of the tutorial

Deploy dockerized PHP Apps to production - using multiple VMS and managed mysql and redis instances from GCP,

we need to retrieve the IP addresses of all our services

(see Poor man's DNS via --add-host)

via the gcloud cli. This requires some additional

IAM roles / permissions

for the

deployment service account:

- cloudsql.viewer

- required to retrieve meta data from MySQL Cloud SQL instances

- redis.viewer

- required to retrieve meta data from Redis Memorystore instances

- compute.viewer

- required to retrieve meta data from Compute Instance VMs

I have added the APIs and roles also in the .infrastructure/setup-gcp.sh script:

project_id=$1

deployment_service_account_id=deployment

deployment_service_account_mail="${deployment_service_account_id}@${project_id}.iam.gserviceaccount.com"

gcloud services enable \

containerregistry.googleapis.com \

secretmanager.googleapis.com \

compute.googleapis.com \

iam.googleapis.com \

storage.googleapis.com \

cloudresourcemanager.googleapis.com \

sqladmin.googleapis.com \

redis.googleapis.com \

servicenetworking.googleapis.com

roles="storage.admin secretmanager.admin compute.admin iam.serviceAccountUser iap.tunnelResourceAccessor cloudsql.viewer redis.viewer compute.viewer"

for role in $roles; do

gcloud projects add-iam-policy-binding "${project_id}" --member=serviceAccount:"${deployment_service_account_mail}" "--role=roles/${role}"

done;
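
If something doesn't work later on, a missing API is a common culprit. As a sketch, the following helper (the name `missing_apis` is made up) compares a list of required APIs with the list of enabled ones that is read from stdin and prints every API that is not enabled yet.

```shell
# Print every required API that is missing in the list of enabled APIs.
# $1:    space-separated list of required APIs
# stdin: enabled APIs, one per line
missing_apis() {
  local required="$1" enabled api
  enabled="$(cat)"
  for api in $required; do
    # -x: match the whole line, -F: treat the API name as a fixed string
    if ! printf '%s\n' "$enabled" | grep -qxF "$api"; then
      printf '%s\n' "$api"
    fi
  done
}

# Usage:
#   gcloud services list --enabled --format="value(config.name)" \
#     | missing_apis "sqladmin.googleapis.com redis.googleapis.com servicenetworking.googleapis.com"
```

An empty output means that all required APIs are enabled.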

Service setup

Cloud SQL for MySQL setup

Please refer to How to use GCP MySQL Cloud SQL instances - from creation over connection to deletion for a general introduction into Cloud SQL for MySQL.

For this tutorial, we will create a mysql instance with the following settings:

- 1 CPU

- 3840 MB RAM

- Private Service Access / private IP only

- Enabled deletion protection

We'll also set the password of the root user to the password defined in the

DB_PASSWORD variable

in .secrets/prod/app.env

and create an application database named

application_db

I adapted the gcloud setup from How to use GCP MySQL Cloud SQL instances: "Using the gcloud CLI" to create the script .infrastructure/setup-mysql.sh, which creates a mysql instance via the following commands

project_id=$1

mysql_instance_name=$2

root_password=$3

region=us-central1

master_service_account_key_location=./gcp-master-service-account-key.json

memory="3840MB"

cpus=1

version="MYSQL_8_0"

default_database="application_db"

network="default"

private_vpc_range_name="google-managed-services-vpc-allocation"

gcloud beta sql instances create "${mysql_instance_name}" \

--database-version="${version}" \

--cpu="${cpus}" \

--memory="${memory}" \

--region="${region}" \

--network="${network}" \

--deletion-protection \

--no-assign-ip \

--allocated-ip-range-name="${private_vpc_range_name}"

gcloud sql users set-password root \

--host=% \

--instance "${mysql_instance_name}" \

--password "${root_password}"

gcloud sql databases create "${default_database}" \

--instance="${mysql_instance_name}" \

--charset=utf8mb4 \

--collation=utf8mb4_unicode_ci

A corresponding make infrastructure-setup-mysql target is defined in .make/04-00-infrastructure.mk

.PHONY: infrastructure-setup-mysql

infrastructure-setup-mysql: ## Set the mysql instance up. The ROOT_PASSWORD variable is required to define the password for the root user

@$(if $(ROOT_PASSWORD),,$(error "ROOT_PASSWORD is undefined"))

bash .infrastructure/setup-mysql.sh $(GCP_PROJECT_ID) $(VM_NAME_MYSQL) $(ROOT_PASSWORD) $(ARGS)

CAUTION: We use MYSQL_8_0 as version for the instance and should ensure to keep this in

sync with the value of the MYSQL_VERSION variable in the

.docker/.env file (see also

Structuring the Docker setup for PHP Projects .env.example and docker-compose.yml),

so that we use the same version in the docker compose for our local and ci setup and keep

parity between the environments.
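
Such a parity check could be automated. The following sketch assumes that `MYSQL_VERSION` holds a docker image tag like `8.0` or `8.0.30` (an assumption - please check your `.docker/.env` file) and converts it to the naming scheme of Cloud SQL. The function name `to_cloudsql_version` is hypothetical.

```shell
# Convert a version like "8.0" or "8.0.30" to the Cloud SQL naming scheme, e.g. "MYSQL_8_0".
# Assumption: the given version contains at least a major and a minor part.
to_cloudsql_version() {
  local tag="$1" major rest minor
  major="${tag%%.*}"   # everything before the first "."
  rest="${tag#*.}"     # everything after the first "."
  minor="${rest%%.*}"  # the minor version without a patch level
  printf 'MYSQL_%s_%s\n' "$major" "$minor"
}

# Usage:
#   [ "$(to_cloudsql_version "8.0.30")" = "MYSQL_8_0" ] || echo "MySQL versions are out of sync"
```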

Redis Memorystore setup

Please refer to How to use GCP Redis Memorystore instances - from creation over connection to deletion for a general introduction into Redis Memorystore.

For this tutorial, we will create a redis instance with the following settings:

- 1 GiB RAM

- Private Service Access / private IP

- Enabled AUTH

I adapted the gcloud setup from How to use GCP Redis Memorystore instances: "Using the gcloud CLI" to create the script .infrastructure/setup-redis.sh, which creates a redis instance via the following commands

project_id=$1

redis_instance_name=$2

region=us-central1

size=1 # in GiB

network="default"

version="redis_6_x"

private_vpc_range_name="google-managed-services-vpc-allocation"

gcloud redis instances create "${redis_instance_name}" \

--size="${size}" \

--region="${region}" \

--network="${network}" \

--redis-version="${version}" \

--connect-mode=private-service-access \

--reserved-ip-range="${private_vpc_range_name}" \

--enable-auth \

-q

A corresponding make infrastructure-setup-redis target is defined in

.make/04-00-infrastructure.mk

.PHONY: infrastructure-setup-redis

infrastructure-setup-redis: ## Set the redis instance up

bash .infrastructure/setup-redis.sh $(GCP_PROJECT_ID) $(VM_NAME_REDIS) $(ARGS)

CAUTION: We use redis_6_x as version for the instance and should ensure to keep this in

sync with the value of the REDIS_VERSION variable in the

.docker/.env file (see also

Structuring the Docker setup for PHP Projects .env.example and docker-compose.yml),

so that we use the same version in the docker compose for our local and ci setup and keep

parity between the environments.

The AUTH string is created automatically,

i.e. we must retrieve it after the creation and update the value of the REDIS_PASSWORD

variable in the production .env file of the application at

.secrets/prod/app.env. I

have created a corresponding make target in .make/03-00-gcp.mk

.PHONY: gcp-get-redis-auth

gcp-get-redis-auth: ## Get the AUTH string of the Redis service

gcloud redis instances get-auth-string $(VM_NAME_REDIS) --project=$(GCP_PROJECT_ID) --region=$(GCP_REGION)

Please note that you need to activate the master service account first, because retrieving the AUTH string requires the permission redis.instances.getAuthString, which is by default only available in the role roles/redis.admin.
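
Updating the `REDIS_PASSWORD` value by hand is error-prone, so here is a sketch of a small helper that sets a `KEY=value` pair in an `.env` file. The function name `set_env_value` is made up, and the `--format="value(authString)"` argument in the usage example is an assumption about the field name in the output of `get-auth-string`.

```shell
# Set KEY=value in an env file: an existing line for the key is replaced,
# otherwise the assignment is appended at the end of the file.
# $1: file, $2: key, $3: value
set_env_value() {
  local file="$1" key="$2" value="$3" tmp
  tmp="$(mktemp)"
  # Keep all lines except an existing assignment of the key
  # (grep exits with 1 if no line is left, which is fine here)
  grep -v "^${key}=" "$file" > "$tmp" || true
  printf '%s=%s\n' "$key" "$value" >> "$tmp"
  # Write the content back instead of moving the temp file to keep the file permissions
  cat "$tmp" > "$file"
  rm -f "$tmp"
}

# Usage (requires the activated master service account):
#   auth="$(gcloud redis instances get-auth-string redis-vm --region=us-central1 --format="value(authString)")"
#   set_env_value .secrets/prod/app.env REDIS_PASSWORD "$auth"
```

Note that this sketch moves the key to the end of the file if it already existed.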

Set up the VMs that run docker containers

We'll mostly stick with the same VM configuration as outlined in my Run Docker on GCP Compute Instance VMs: Create a Compute Instance VM, though we need to ensure that the VMs for the application containers application, php-fpm and php-worker don't get a public IP. This is achieved by adding the --no-address flag.

Note: Adding the flag seemed to have no effect at first. This was because I initially used the --network-interface option to define all the network settings "as a single string". When this option is provided, the --no-address flag has no effect, because --network-interface takes precedence and the default value here is address, i.e. "provide a public IP address".

In other words: This doesn't work as expected

$ gcloud compute instances create test --zone="us-central1-a" --machine-type="f1-micro" \

--network-interface=network=default \

--no-address

Created [https://www.googleapis.com/compute/v1/projects/ay-mit-mct-atmo-gcp-mig-temp-c/zones/us-central1-a/instances/test].

NAME ZONE MACHINE_TYPE PREEMPTIBLE INTERNAL_IP EXTERNAL_IP STATUS

test us-central1-a f1-micro 10.128.0.6 34.170.88.99 RUNNING

# ==> Note the "EXTERNAL_IP"

This does:

$ gcloud compute instances create test --zone="us-central1-a" --machine-type="f1-micro" \

--network=default \

--no-address

Created [https://www.googleapis.com/compute/v1/projects/ay-mit-mct-atmo-gcp-mig-temp-c/zones/us-central1-a/instances/test].

NAME ZONE MACHINE_TYPE PREEMPTIBLE INTERNAL_IP EXTERNAL_IP STATUS

test us-central1-a f1-micro 10.128.0.7 RUNNING

# ==> Note that no "EXTERNAL_IP" is given
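
If you prefer to keep the `--network-interface` option, it also understands a `no-address` key according to the gcloud reference. The following sketch shows that variant together with a check for the external IP. Both function names are hypothetical, and the tutorial scripts use the `--network` / `--no-address` combination shown above instead.

```shell
# Variant (not used in the tutorial scripts): keep --network-interface
# and disable the external IP via its "no-address" key.
# $1: VM name, $2: zone
create_private_vm() {
  local vm_name="$1" zone="$2"
  gcloud compute instances create "${vm_name}" \
    --zone="${zone}" \
    --machine-type="f1-micro" \
    --network-interface=network=default,no-address
}

# Print the external IP of a VM; the output is empty if no external IP is assigned.
# $1: VM name, $2: zone
get_external_ip() {
  local vm_name="$1" zone="$2"
  gcloud compute instances describe "${vm_name}" \
    --zone="${zone}" \
    --format="get(networkInterfaces[0].accessConfigs[0].natIP)"
}

# Usage:
#   create_private_vm test us-central1-a
#   [ -z "$(get_external_ip test us-central1-a)" ] && echo "No external IP assigned"
```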

In addition, the http-server tag responsible for

assigning the firewall rule to allow http traffic

is also not necessary and must be omitted.

I've split the script explained under Run Docker on GCP Compute Instance VMs: Putting it all together into the "general" GCP setup at .infrastructure/setup-gcp.sh and a script dedicated to creating a Compute Instance VM at .infrastructure/setup-vm.sh via the following commands

project_id=$1

vm_name=$2

enable_public_access=$3

vm_zone=us-central1-a

master_service_account_key_location=./gcp-master-service-account-key.json

deployment_service_account_id=deployment

deployment_service_account_mail="${deployment_service_account_id}@${project_id}.iam.gserviceaccount.com"

network="default"

# By default, VMs should not get an external IP address

# @see https://cloud.google.com/sdk/gcloud/reference/compute/instances/create#--no-address

args_for_public_access="--no-address"

if [ -n "$enable_public_access" ]

then

# The only exception is the nginx image - that should also be available via http / port 80

args_for_public_access="--tags=http-server"

fi

gcloud compute instances create "${vm_name}" \

--project="${project_id}" \

--zone="${vm_zone}" \

--machine-type=e2-micro \

--network="${network}" \

--subnet=default \

--network-tier=PREMIUM \

--no-restart-on-failure \

--maintenance-policy=MIGRATE \

--provisioning-model=STANDARD \

--service-account="${deployment_service_account_mail}" \

--scopes=https://www.googleapis.com/auth/cloud-platform \

--create-disk=auto-delete=yes,boot=yes,device-name="${vm_name}",image=projects/debian-cloud/global/images/debian-11-bullseye-v20220822,mode=rw,size=10,type=projects/"${project_id}"/zones/"${vm_zone}"/diskTypes/pd-balanced \

--no-shielded-secure-boot \

--shielded-vtpm \

--shielded-integrity-monitoring \

--reservation-affinity=any $args_for_public_access

Note: If the script is invoked with a 3rd argument, we'll assume that it's for the nginx

service so that the --no-address flag is omitted and the --tags=http-server is added.
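
The decision logic is easier to reason about (and to test) when it is isolated in a function. The following is just a sketch of the same behavior with a made-up function name, not the code of the actual script.

```shell
# Print the extra argument for `gcloud compute instances create`.
# $1: any non-empty value enables public access (only used for nginx)
public_access_args() {
  if [ -n "${1:-}" ]; then
    # Publicly reachable VM: keep the external IP and allow http traffic
    echo "--tags=http-server"
  else
    # Default: no external IP address
    echo "--no-address"
  fi
}

# Usage:
#   public_access_args                       # for application, php-fpm and php-worker
#   public_access_args enable_public_access  # for nginx
```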

A corresponding "generic" make infrastructure-setup-vm target is defined in

.make/04-00-infrastructure.mk

.PHONY: infrastructure-setup-vm

infrastructure-setup-vm: ## Setup the VM specified via VM_NAME. Usage: make infrastructure-setup-vm VM_NAME=php-worker ARGS=""

@$(if $(VM_NAME),,$(error "VM_NAME is undefined"))

bash .infrastructure/setup-vm.sh $(GCP_PROJECT_ID) $(VM_NAME) $(ARGS)

In addition, there are dedicated targets for each service, e.g.

.PHONY: infrastructure-setup-vm-php-worker

infrastructure-setup-vm-php-worker:

"$(MAKE)" --no-print-directory infrastructure-setup-vm VM_NAME=$(VM_NAME_PHP_WORKER)

.PHONY: infrastructure-setup-vm-nginx

infrastructure-setup-vm-nginx:

"$(MAKE)" --no-print-directory infrastructure-setup-vm VM_NAME=$(VM_NAME_NGINX) ARGS="enable_public_access"

as well as a "combined" target to run the setup in parallel. See also section

Use make to execute infrastructure and deployment targets in parallel.

.PHONY: infrastructure-setup-all-vms

infrastructure-setup-all-vms: ## Setup all VMs

@printf "$(YELLOW)The setup runs in parallel but the output will only be visible once a process is fully finished (can take a couple of minutes)$(NO_COLOR)\n"

"$(MAKE)" -j --output-sync=target infrastructure-setup-vm-application \

infrastructure-setup-vm-php-fpm \

infrastructure-setup-vm-php-worker \

infrastructure-setup-vm-nginx \

infrastructure-setup-redis \

infrastructure-setup-mysql

See section Add additional make variables for the definition

of variables like $(VM_NAME_PHP_WORKER) and $(VM_NAME_NGINX).

Provisioning the VMs

The original provisioning script explained in

Run Docker on GCP Compute Instance VMs: Provisioning

located at .infrastructure/scripts/provision.sh stays almost as before, though we were able to

remove the docker-compose-plugin dependency as we won't need compose any longer on the

production VMs.

In addition, I added some more make targets in .make/04-00-infrastructure.mk to make the

provisioning easier

.PHONY: infrastructure-provision-vm

infrastructure-provision-vm: ## Provision the VM specified via VM_NAME. Usage: make infrastructure-provision-vm VM_NAME=php-worker ARGS=""

@$(if $(VM_NAME),,$(error "VM_NAME is undefined"))

bash .infrastructure/provision-vm.sh $(GCP_PROJECT_ID) $(VM_NAME) $(ARGS) \

&& printf "$(GREEN)Success at provisioning $(VM_NAME)$(NO_COLOR)\n" \

|| printf "$(RED)Failed provisioning $(VM_NAME)$(NO_COLOR)\n"

.PHONY: infrastructure-provision-vm-nginx

infrastructure-provision-vm-nginx:

"$(MAKE)" --no-print-directory infrastructure-provision-vm VM_NAME=$(VM_NAME_NGINX)

# ...

.PHONY: infrastructure-provision-all

infrastructure-provision-all: ## Provision all VMs

@printf "$(YELLOW)The provisioning runs in parallel but the output will only be visible once a process is fully finished (can take a couple of minutes)$(NO_COLOR)\n"

"$(MAKE)" -j --output-sync=target infrastructure-provision-vm-application \

infrastructure-provision-vm-php-fpm \

infrastructure-provision-vm-php-worker \

infrastructure-provision-vm-nginx
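
Provisioning happens over the network and can fail for transient reasons. As a sketch, a generic retry helper could wrap the provisioning script. The function name `retry` is made up, and it wraps the script directly, because the `infrastructure-provision-vm` target above prints a message on failure but still exits successfully.

```shell
# Run a command up to <attempts> times and wait <delay> seconds between the attempts.
# $1: attempts, $2: delay in seconds, remaining arguments: the command to run
retry() {
  local attempts="$1" delay="$2" i=1
  shift 2
  while [ "$i" -le "$attempts" ]; do
    if "$@"; then
      return 0
    fi
    i=$((i + 1))
    # Only wait if there is another attempt left
    if [ "$i" -le "$attempts" ]; then
      sleep "$delay"
    fi
  done
  return 1
}

# Usage:
#   retry 3 10 bash .infrastructure/provision-vm.sh "my-project-id" php-fpm-vm
```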

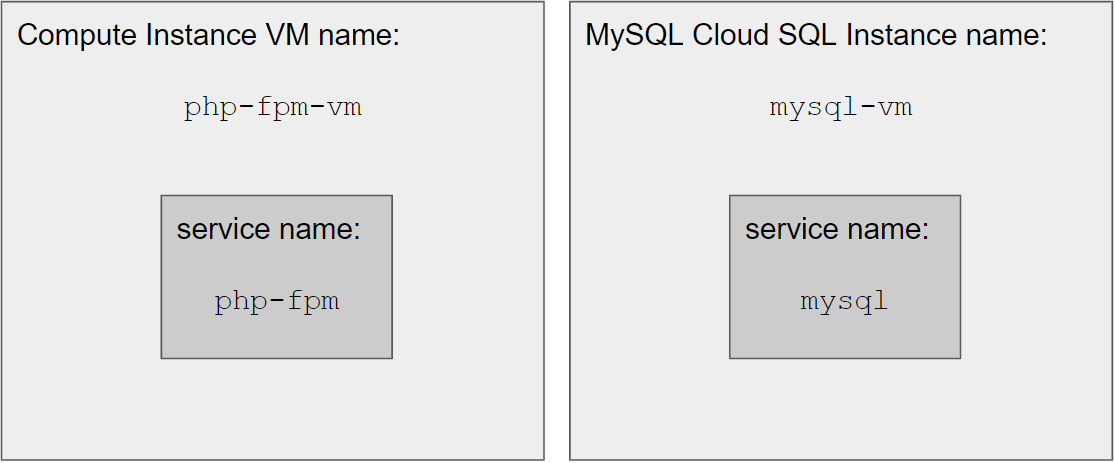

Mapping service names to VM names

In this tutorial I'm using the terms "service name" and "VM name" quite a lot. To avoid confusion, here is how I think about them:

- service name
  - is used in the sense of a docker compose service:
    A Service is an abstract definition of a computing resource within an application which can be scaled/replaced independently from other components. Services are backed by a set of containers, run by the platform according to replication requirements and placement constraints.
  - our application has 6 services (nginx, php-fpm, application, php-worker, mysql and redis) and so far each service was defined as an individual docker image and ran as a single docker container
  - starting from this tutorial, mysql and redis are no longer used via docker images
  - services are a "logical" component - not an infrastructural one
- VM name
  - a "service" can be run on a "VM"
  - is a unique identifier for a Virtual Machine on GCP and is often required in gcloud commands to identify the instance for a command
  - VMs are infrastructure components
  - this includes the "Compute Engine" instances for our application services as well as the MySQL Cloud SQL instance for our mysql service and the Redis Memorystore instance for our redis service

In this tutorial we use "one VM per service" and can thus use a 1-to-1 mapping from service name

to VM name. As a convention, we use almost the same name, i.e. the php-fpm service runs on the

Compute Engine VM instance with the name php-fpm-vm. Using the -vm suffix avoids confusion

and ensures that we don't create an unintended coupling between the service name and the VM name.

Using the exact same name (service name = php-fpm and VM name = php-fpm) has led to

problems for me in the past, because

the name of a MySQL Cloud SQL instance used to be blocked even after deletion for one week,

so it might not even be possible to use that exact name.

I have defined this mapping as a variable in .make/variables.env as follows:

# must match the names used in the docker-compose.yml files

DOCKER_SERVICE_NAME_NGINX:=nginx

DOCKER_SERVICE_NAME_PHP_BASE:=php-base

DOCKER_SERVICE_NAME_PHP_FPM:=php-fpm

DOCKER_SERVICE_NAME_PHP_WORKER:=php-worker

DOCKER_SERVICE_NAME_APPLICATION:=application

DOCKER_SERVICE_NAME_MYSQL:=mysql

DOCKER_SERVICE_NAME_REDIS:=redis

# VM / instance names

VM_NAME_APPLICATION=$(DOCKER_SERVICE_NAME_APPLICATION)-vm

VM_NAME_PHP_FPM=$(DOCKER_SERVICE_NAME_PHP_FPM)-vm

VM_NAME_PHP_WORKER=$(DOCKER_SERVICE_NAME_PHP_WORKER)-vm

VM_NAME_NGINX=$(DOCKER_SERVICE_NAME_NGINX)-vm

VM_NAME_MYSQL=$(DOCKER_SERVICE_NAME_MYSQL)-vm

VM_NAME_REDIS=$(DOCKER_SERVICE_NAME_REDIS)-vm

# Helpers

ALL_VM_SERVICE_NAMES=$(VM_NAME_APPLICATION):$(DOCKER_SERVICE_NAME_APPLICATION) $(VM_NAME_PHP_FPM):$(DOCKER_SERVICE_NAME_PHP_FPM) $(VM_NAME_PHP_WORKER):$(DOCKER_SERVICE_NAME_PHP_WORKER) $(VM_NAME_NGINX):$(DOCKER_SERVICE_NAME_NGINX)

The ALL_VM_SERVICE_NAMES variable is used, for instance, for retrieving all IP addresses of the VMs to create the service-ips file.
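
Each entry of `ALL_VM_SERVICE_NAMES` is a `<vm name>:<service name>` pair. As a sketch of how such pairs can be taken apart in a shell script (the function name is made up for this example):

```shell
# Print one "<service> runs on <vm>" line per "<vm>:<service>" pair
print_vm_service_mapping() {
  local pair vm_name service_name
  for pair in "$@"; do
    vm_name="${pair%%:*}"      # everything before the first ":"
    service_name="${pair#*:}"  # everything after the first ":"
    printf '%s runs on %s\n' "${service_name}" "${vm_name}"
  done
}

# Usage:
#   print_vm_service_mapping application-vm:application php-fpm-vm:php-fpm
```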

Appendix: Changes in the codebase

This section is mostly relevant if you have been following the previous tutorials. It explains some changes that have been introduced for this part.

Add a make dev-init target

A new dev-init target was added in a new sub makefile at .make/00-00-development-setup.mk.

It simplifies the setup of the tutorial repository when it has been freshly cloned.

I realized that there are quite a few things to keep in mind in this case (e.g. set up various

.env files, decrypt the secrets, install composer dependencies, etc.). I believe this can lead

to a bad experience if you start with "this" part of the tutorial, as a lot of those setup

things are done in previous parts.

Update the gcloud targets

So far, the targets in .make/03-00-gcp.mk could assume a single GCP_VM_NAME variable as

there was only one Compute Instance VM. Now, we have

one VM per service and thus need to pass the

VM_NAME as a required argument to most VM related targets (i.e. GCP_VM_NAME was replaced by

VM_NAME).

Example: Logging into a VM via gcp-ssh-login now requires the VM_NAME, because

we need to define in which exact VM we want to log in

.PHONY: gcp-ssh-login

gcp-ssh-login: validate-gcp-variables ## Log into a VM via IAP tunnel

@$(if $(VM_NAME),,$(error "VM_NAME is undefined"))

gcloud compute ssh $(VM_NAME) --project $(GCP_PROJECT_ID) --zone $(GCP_ZONE) --tunnel-through-iap

# Example to log into the php-fpm VM:

# make gcp-ssh-login VM_NAME=php-fpm-vm

In addition, I have added a dedicated target to activate the master service account, as it is required for some actions (like retrieving the redis AUTH string - see Redis Memorystore setup):

.PHONY: gcp-init

gcp-init: validate-gcp-variables ## Initialize the `gcloud` cli and authenticate docker with the keyfile defined via SERVICE_ACCOUNT_KEY_FILE.

@$(if $(SERVICE_ACCOUNT_KEY_FILE),,$(error "SERVICE_ACCOUNT_KEY_FILE is undefined"))

gcloud auth activate-service-account --key-file="$(SERVICE_ACCOUNT_KEY_FILE)" --project="$(GCP_PROJECT_ID)"

.PHONY: gcp-init-deployment-account

gcp-init-deployment-account: validate-gcp-variables ## Initialize the `gcloud` cli with the deployment service account

@$(if $(GCP_DEPLOYMENT_SERVICE_ACCOUNT_KEY_FILE),,$(error "GCP_DEPLOYMENT_SERVICE_ACCOUNT_KEY_FILE is undefined"))

"$(MAKE)" gcp-init SERVICE_ACCOUNT_KEY_FILE=$(GCP_DEPLOYMENT_SERVICE_ACCOUNT_KEY_FILE)

cat "$(GCP_DEPLOYMENT_SERVICE_ACCOUNT_KEY_FILE)" | docker login -u _json_key --password-stdin https://gcr.io

.PHONY: gcp-init-master-account

gcp-init-master-account: validate-gcp-variables ## Initialize the `gcloud` cli with the master service account

@$(if $(GCP_MASTER_SERVICE_ACCOUNT_KEY_FILE),,$(error "GCP_MASTER_SERVICE_ACCOUNT_KEY_FILE is undefined"))

"$(MAKE)" gcp-init SERVICE_ACCOUNT_KEY_FILE=$(GCP_MASTER_SERVICE_ACCOUNT_KEY_FILE)

As part of this change, the variable GCP_DEPLOYMENT_SERVICE_ACCOUNT_KEY was renamed to

GCP_DEPLOYMENT_SERVICE_ACCOUNT_KEY_FILE and GCP_MASTER_SERVICE_ACCOUNT_KEY_FILE was added.

Add additional make variables

The following variables have been added:

GCP_REGION=us-central1

The region is required to create

- the Cloud Router and Cloud NAT gateway

- the MySQL Cloud SQL instance

- the Redis Memorystore instance and retrieve its private IP address

GCP_DEPLOYMENT_SERVICE_ACCOUNT_KEY_FILE

GCP_MASTER_SERVICE_ACCOUNT_KEY_FILE

See previous section Update the gcloud targets.

DOCKER_SERVICE_NAME_...

VM_NAME_...

ALL_VM_SERVICE_NAMES

See section Mapping service names to VM names.

Use make to execute infrastructure and deployment targets in parallel

We have already learned about the capability of make to run targets in parallel with the -j

flag in

Set up PHP QA tools: Parallel execution and a helper target:

.PHONY: foo

foo:

"$(MAKE)" -j target-1 target-2

This is particularly helpful when dealing with I/O heavy targets, e.g. when making API calls. This is great, because we are making a lot of those, e.g. during the creation of the infrastructure but also during the deployment of the application. Unfortunately, it's not possible to pass individual arguments per target, i.e. in the following example

.PHONY: infrastructure-setup-all

infrastructure-setup-all: ## Setup all VMs

"$(MAKE)" -j --output-sync=target infrastructure-setup-vm VM_NAME=application-vm \

infrastructure-setup-vm VM_NAME=php-fpm-vm

the value of VM_NAME would always be php-fpm-vm. Thus, we must define each target that

should run in parallel individually like this:

.PHONY: infrastructure-setup-vm

infrastructure-setup-vm: ## Setup the VM specified via VM_NAME. Usage: make infrastructure-setup-vm VM_NAME=php-worker ARGS=""

bash .infrastructure/setup-vm.sh $(GCP_PROJECT_ID) $(VM_NAME) $(ARGS)

.PHONY: infrastructure-setup-vm-application

infrastructure-setup-vm-application:

"$(MAKE)" --no-print-directory infrastructure-setup-vm VM_NAME=$(VM_NAME_APPLICATION)

.PHONY: infrastructure-setup-vm-php-fpm

infrastructure-setup-vm-php-fpm:

"$(MAKE)" --no-print-directory infrastructure-setup-vm VM_NAME=$(VM_NAME_PHP_FPM)

.PHONY: infrastructure-setup-all

infrastructure-setup-all: ## Setup all VMs

"$(MAKE)" -j --output-sync=target infrastructure-setup-vm-application \

infrastructure-setup-vm-php-fpm

This introduces some typing overhead, but I didn't find a better way to do this, yet.

In theory, we could also use

other ways to run the targets in parallel,

e.g. by running the process in the background via &

infrastructure-setup-all: ## Provision all VMs

for vm_name in $(VM_NAME_APPLICATION) $(VM_NAME_PHP_FPM); do \

"$(MAKE)" --no-print-directory infrastructure-provision-vm VM_NAME="$$vm_name" & \

done; \

wait; \

echo "ALL DONE"

But: This doesn't give us any way to

synchronize the output as we can do with make via --output-sync=target

so that the output quickly becomes quite messy. Using

GNU parallel might be an option, but it doesn't come

natively e.g. on Windows and would be a dependency that needed to be installed by all developers

(which we try to avoid as much as possible - see

Use git-secret to encrypt secrets: Local git-secret and gpg setup).
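
For completeness, here is a sketch of how the output of background processes could be kept apart in plain shell: each command writes to its own temporary log file, and the logs are printed one after another once the corresponding process has finished. The function name `run_parallel_synced` is made up, and this is not what the tutorial repository does - it sticks with make and --output-sync=target.

```shell
# Run all given commands in parallel and print their outputs one after another.
# Each argument is a full command line that is executed via `sh -c`.
# Exit code: 0 if all commands succeeded, 1 otherwise.
run_parallel_synced() {
  local tmpdir i pid pids status cmd
  tmpdir="$(mktemp -d)"
  i=0
  pids=""
  status=0
  for cmd in "$@"; do
    # Start the command in the background and capture its output in a dedicated file
    sh -c "$cmd" > "${tmpdir}/${i}.log" 2>&1 &
    pids="${pids} $!"
    i=$((i + 1))
  done
  i=0
  for pid in $pids; do
    # Wait for the process, remember failures and print its captured output
    wait "$pid" || status=1
    cat "${tmpdir}/${i}.log"
    i=$((i + 1))
  done
  rm -rf "$tmpdir"
  return "$status"
}

# Usage:
#   run_parallel_synced \
#     "bash .infrastructure/provision-vm.sh my-project-id application-vm" \
#     "bash .infrastructure/provision-vm.sh my-project-id php-fpm-vm"
```

The outputs are printed in the order of the arguments, i.e. the output of a fast command is held back until all commands before it have finished.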

Wrapping up

Congratulations, you made it! If some things are not completely clear by now, don't hesitate to

leave a comment. You should now be ready to create a production ready infrastructure on GCP

including multiple VMs and managed services for mysql and redis.

In the next part of this tutorial, we will

deploy a dockerized PHP application "to production" via docker (without compose) on multiple VMs.

Please subscribe to the RSS feed or via email to get automatic notifications when this next part comes out :)
