In the ninth part of this tutorial series on developing PHP on Docker we will deploy our dockerized PHP application to a production environment (a GCP Compute Instance VM) and run it via docker compose as a proof of concept.

All code samples are publicly available in my Docker PHP Tutorial repository on GitHub.

You can find the branch with the final result of this tutorial at part-9-deploy-docker-compose-php-gcp-poc.

All published parts of the Docker PHP Tutorial are collected under a dedicated page at Docker PHP Tutorial. The previous part was Create a GCP Compute Instance VM for dockerized PHP Apps.

If you want to follow along, please subscribe via RSS feed or email to get automatic notifications when the next part comes out :)

Table of contents

- Introduction

- Deployment workflow

- Codebase changes

- Docker changes

- Makefile changes

- Wrapping up

Introduction

In the previous tutorial Create a GCP compute instance VM to run dockerized applications we created a Compute Instance VM on GCP and prepared it to run docker containers. For this tutorial, I made a small adjustment and changed the machine type from e2-micro to e2-small, because we need a little more memory to run the whole application.

In this tutorial, we will use the VM as a production environment, i.e. we will

- prepare our docker setup for production usage
- build and push the production-ready docker images to the GCP registry from our local system
- pull and start the images on the VM

The whole process will be defined in a single make target called deploy.

To try it yourself:

- create an account on GCP, a project and a master service account
- create a keyfile for the service account, name it gcp-master-service-account-key.json and move it to the root of the repository
- check out branch part-9-deploy-docker-compose-php-gcp-poc
- update the .make/variables.env file with your GCP project id and VM name
- initialize the local docker setup by
  - copying the secret gpg key to the root of the repository via cp .tutorial/secret.gpg.example ./secret.gpg
  - initializing the shared variables via make make-init
  - building the docker setup via make docker-build
  - starting the docker setup via make docker-up
  - decrypting the secrets via make gpg-init and make secret-decrypt
- run the script located at .infrastructure/setup-gcp.sh to create a GCP VM
- run make deploy IGNORE_UNCOMMITTED_CHANGES=true to deploy the application
- run make deployment-setup-db-on-vm to run the DB migrations
- run make gcp-show-ip to retrieve the public IP of the VM and open it in a browser

project_id=my-new-project100

vm_name=my-vm-name

git checkout part-9-deploy-docker-compose-php-gcp-poc

sed -i "s/pl-dofroscra-p/${project_id}/g" .make/variables.env

sed -i "s/dofroscra-test/${vm_name}/g" .make/variables.env

cp .tutorial/secret.gpg.example ./secret.gpg

make make-init

make docker-build

make docker-up

make gpg-init

make secret-decrypt

bash .infrastructure/setup-gcp.sh $project_id $vm_name

make deploy IGNORE_UNCOMMITTED_CHANGES=true

make deployment-setup-db-on-vm

echo "http://$(make -s gcp-show-ip)/"

Note: It can take a couple of minutes until the infrastructure is up and running.

docker compose should not be used on a single VM in a production setup, because one huge benefit of docker is the separation of services into horizontally scalable containers. Running everything on a single VM would pretty much defeat that purpose. In addition, we use docker containers for the mysql and redis databases. It would be far better to use managed services like Memorystore for redis and Cloud SQL for mysql, so that we don't have to deal with backups etc. ourselves.

Note: We will tackle those issues and "remove" the POC status in the next part of the tutorial series.

Deployment workflow

As a precondition we expect that a GCP VM is up and running. The basic idea of the deployment is:

- build the docker images using the prod environment and push them to the remote registry
- log into the VM and pull the images
- use docker compose on the VM to start the docker setup

This shouldn't be too complicated, because we already do the same thing locally, don't we? In theory: Yes. In practice, there is one major difference: Locally, we have access to our repository, including the files for running

- the docker setup (=> the .docker directory)
- the make setup to control the application (=> the Makefile and the .make directory)

On the VM, those files are not available. Fortunately, we can solve this issue easily and provide a single make target named deploy that takes care of everything.

The deploy target

The deploy target runs all necessary commands for a deployment:

Run safeguard checks to avoid code drift

@printf "$(GREEN)Switching to 'local' environment$(NO_COLOR)\n"

@make --no-print-directory make-init

@printf "$(GREEN)Starting docker setup locally$(NO_COLOR)\n"

@make --no-print-directory docker-up

@printf "$(GREEN)Verifying that there are no changes in the secrets$(NO_COLOR)\n"

@make --no-print-directory gpg-init

@make --no-print-directory deployment-guard-secret-changes

@printf "$(GREEN)Verifying that there are no uncommitted changes in the codebase$(NO_COLOR)\n"

@make --no-print-directory deployment-guard-uncommitted-changes

Make sure the gcloud cli is initialized with the GCP deployment service account and that this account is also used to authenticate docker. Otherwise, we won't be able to push images to our GCP container registry.

@printf "$(GREEN)Initializing gcloud$(NO_COLOR)\n"

@make --no-print-directory gcp-init

Enable the prod environment for the make setup, see section ENV based docker compose config

@printf "$(GREEN)Switching to 'prod' environment$(NO_COLOR)\n"

@make --no-print-directory make-init ENVS="ENV=prod TAG=latest"

Create the build-info file

@printf "$(GREEN)Creating build information file$(NO_COLOR)\n"

@make --no-print-directory deployment-create-build-info-file

Build and push the docker images

@printf "$(GREEN)Building docker images$(NO_COLOR)\n"

@make --no-print-directory docker-build

@printf "$(GREEN)Pushing images to the registry$(NO_COLOR)\n"

@make --no-print-directory docker-push

@printf "$(GREEN)Creating the deployment archive$(NO_COLOR)\n"

@make deployment-create-tar

Run deployment commands on the VM

@printf "$(GREEN)Copying the deployment archive to the VM and run the deployment$(NO_COLOR)\n"

@make --no-print-directory deployment-run-on-vm

Clean up the deployment by removing the local deployment archive and enabling the default environment (local) for the make setup.

@printf "$(GREEN)Clearing deployment archive$(NO_COLOR)\n"

@make --no-print-directory deployment-clear-tar

@printf "$(GREEN)Switching to 'local' environment$(NO_COLOR)\n"

@make --no-print-directory make-init

Avoiding code drift

The term "code drift" is derived from configuration drift, which indicates the (subtle) differences in configuration between environments:

> If you've ever heard an engineer lamenting (or sometimes arrogantly proclaiming) "well, it works on my machine" then you have been witness to configuration drift.

In our case, it refers to differences between our git repository and the code in the docker images, as well as differences between the decrypted and encrypted secret files. These problems can occur because we currently execute the deployment from our local machine, and when we build the docker images, the codebase might contain changes that are not yet reflected in git. The build context sent to the docker daemon would then differ from the git repository or from the encrypted .secret files. This can lead to all sorts of hard-to-debug quirks and should thus be avoided.

When we deploy from the CI pipelines later on, those problems simply won't occur, because the whole codebase will be identical to the git repository. But I really do NOT want to lose the ability to deploy code from my local system (devs that went through GitLab / GitHub downtimes will understand...)

Corresponding checks are implemented via the deployment-guard-uncommitted-changes and deployment-guard-secret-changes targets. If they detect a problem, they call exit 1 (a non-zero status code), which in turn makes the deploy target fail.

IGNORE_SECRET_CHANGES?=

.PHONY: deployment-guard-secret-changes

deployment-guard-secret-changes: ## Check if there are any changes between the decrypted and encrypted secret files

if ( ! make secret-diff || [ "$$(make secret-diff | grep ^@@)" != "" ] ) && [ "$(IGNORE_SECRET_CHANGES)" = "" ] ; then \

printf "Found changes in the secret files => $(RED)ABORTING$(NO_COLOR)\n\n"; \

printf "Use with IGNORE_SECRET_CHANGES=true to ignore this warning\n\n"; \

make secret-diff; \

exit 1; \

fi

@echo "No changes in the secret files!"

make secret-diff is used to check for differences between decrypted and encrypted secrets.

! make secret-diff checks if the command exits with a non-zero exit code. This happens, for instance, when the secrets have not been decrypted yet. The error is

git-secret: abort: file not found. Consider using 'git secret reveal': <missing-file>

If the command doesn't fail, the changes are displayed in a diff format, e.g.

--- /dev/fd/63

+++ /var/www/app/.secrets/shared/passwords.txt

@@ -1 +1,2 @@

my_secret_password

+1

foo

We use grep ^@@ to check for a line that starts with @@ (a hunk header in the diff format), which indicates a change.

If no changes are found, the info "No changes in the secret files!" is printed. Otherwise, a warning is shown and the target exits with a non-zero status code. The check can be suppressed by passing IGNORE_SECRET_CHANGES=true.
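The hunk-header heuristic can be tried out in isolation. Below is a minimal bash sketch, assuming the diff is passed on stdin; has_secret_changes is a hypothetical helper name (not part of the tutorial's Makefile) and the sample diff is made up for illustration:

```bash
#!/usr/bin/env bash
# Hypothetical helper: succeeds (exit code 0) if the unified diff on stdin
# contains at least one hunk header, i.e. a line starting with "@@".
# This mirrors the `make secret-diff | grep ^@@` check of the Makefile recipe.
has_secret_changes() {
  grep -q '^@@'
}

# Made-up example of a diff with one added line
sample_diff='--- /dev/fd/63
+++ /var/www/app/.secrets/shared/passwords.txt
@@ -1 +1,2 @@
 my_secret_password
+foo'

if printf '%s\n' "$sample_diff" | has_secret_changes; then
  echo "Found changes in the secret files"
else
  echo "No changes in the secret files!"
fi
```

An empty diff (no output at all) makes grep return 1, so has_secret_changes fails and the guard would let the deployment continue.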

IGNORE_UNCOMMITTED_CHANGES?=

.PHONY: deployment-guard-uncommitted-changes

deployment-guard-uncommitted-changes: ## Check if there are any git changes and abort if so. The check can be ignored by passing `IGNORE_UNCOMMITTED_CHANGES=true`

if [ "$$(git status -s)" != "" ] && [ "$(IGNORE_UNCOMMITTED_CHANGES)" = "" ] ; then \

printf "Found uncommitted changes in git => $(RED)ABORTING$(NO_COLOR)\n\n"; \

printf "Use with IGNORE_UNCOMMITTED_CHANGES=true to ignore this warning\n\n"; \

git status -s; \

exit 1; \

fi

@echo "No uncommitted changes found!"

For deployment-guard-uncommitted-changes we use git status -s to check for any uncommitted changes (including untracked files). If no changes are found, the info "No uncommitted changes found!" is printed. Otherwise, a warning is shown. The check can be suppressed by passing IGNORE_UNCOMMITTED_CHANGES=true.
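If you want to reuse the check outside of make, it translates into a small bash function. This is a sketch, not the tutorial's code: guard_uncommitted_changes is a hypothetical name, and the demo runs against a throwaway repository created via mktemp so it never touches your working copy:

```bash
#!/usr/bin/env bash
# Hypothetical bash version of the deployment-guard-uncommitted-changes target.
# Returns 1 if `git status -s` reports any change (untracked files included),
# unless IGNORE_UNCOMMITTED_CHANGES is set to a non-empty value.
guard_uncommitted_changes() {
  local changes
  changes="$(git status -s)" || return 1    # e.g. not inside a git repository
  if [ -n "$changes" ] && [ -z "${IGNORE_UNCOMMITTED_CHANGES:-}" ]; then
    printf 'Found uncommitted changes in git => ABORTING\n\n'
    printf 'Use with IGNORE_UNCOMMITTED_CHANGES=true to ignore this warning\n\n'
    printf '%s\n' "$changes"
    return 1
  fi
  echo "No uncommitted changes found!"
}

# Demo in a throwaway repository
repo="$(mktemp -d)"
git -C "$repo" init -q
(cd "$repo" && guard_uncommitted_changes)                 # clean => passes
touch "$repo/new-file.txt"
(cd "$repo" && guard_uncommitted_changes) || echo "=> deployment would stop here"
(cd "$repo" && IGNORE_UNCOMMITTED_CHANGES=true guard_uncommitted_changes)
```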

The build-info file

When testing the deployments I often needed to identify small bugs in the code. The more complex the whole process gets, the more things can go wrong and the more "stuff needs to be checked". One of them is the code drift mentioned in the previous section, btw.

To make my life a little easier, I added a file called build-info that contains information about the build and will be stored in the docker images, allowing me to inspect the file later (see also section Show the build-info).

The file is created via the deployment-create-build-info-file target:

.PHONY: deployment-create-build-info-file

deployment-create-build-info-file: ## Create a file containing version information about the codebase

@echo "BUILD INFO" > ".build/build-info"

@echo "==========" >> ".build/build-info"

@echo "User :" $$(whoami) >> ".build/build-info"

@echo "Date :" $$(date --rfc-3339=seconds) >> ".build/build-info"

@echo "Branch:" $$(git branch --show-current) >> ".build/build-info"

@echo "" >> ".build/build-info"

@echo "Commit" >> ".build/build-info"

@echo "------" >> ".build/build-info"

@git log -1 --no-color >> ".build/build-info"

The file is created on the host system under .build/build-info and then copied to ./build-info in the Dockerfile of the php-base image.

To execute a shell command via $(command), the $ has to be escaped with another $, so that make does not interpret it as a variable. Example:

some-target:

$$(command)

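FYI: I had to learn that make converts all new lines in spaces when they are echo'd, because I initially used

@echo $$(git log -1 --no-color) >> ".build/build-info"

instead of

@git log -1 --no-color >> ".build/build-info"

which removed all new lines. Strictly speaking, the shell is responsible here, not make: The unquoted command substitution $(...) is split into words, and echo prints those words separated by single spaces.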
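The behavior is easy to reproduce in a plain bash shell. In this sketch, multi_line_output is a hypothetical stand-in for git log -1 --no-color:

```bash
#!/usr/bin/env bash
# Hypothetical stand-in for `git log -1 --no-color`
multi_line_output() {
  printf 'commit abc123\nAuthor: Jane Doe\n\n    Testing the new build-info file\n'
}

# Unquoted: the shell splits the output into words, echo joins them with spaces
echo $(multi_line_output)
# → commit abc123 Author: Jane Doe Testing the new build-info file

# Quoted: line breaks survive (only trailing newlines are stripped)
echo "$(multi_line_output)"

# No command substitution at all, as in the final recipe line
multi_line_output
```

Quoting the substitution would also have worked, but writing the command output directly to the file (as the final recipe does) avoids the issue altogether.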

The final build-info file looks like this:

BUILD INFO

==========

User : Pascal

Date : 2022-05-22 17:10:21+02:00

Branch: part-9-deploy-docker-compose-php-gcp-poc

Commit

------

commit c47464536613874d192696d93d3c97b138c7a6be

Author: Pascal Landau <[email protected]>

Date: Sun May 22 17:10:15 2022 +0200

Testing the new `build-info` file
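For experiments outside of make, the deployment-create-build-info-file recipe can be written as a bash function; since make is not involved, the $$ escaping is not necessary. This is a sketch under assumptions: create_build_info is a hypothetical name, it has to run inside a git repository, and date --rfc-3339=seconds requires GNU date (just like the original recipe):

```bash
#!/usr/bin/env bash
# Hypothetical bash version of the deployment-create-build-info-file target.
# Writes the build information of the current git repository to the file $1.
create_build_info() {
  local target="$1"
  mkdir -p "$(dirname "$target")"
  {
    echo "BUILD INFO"
    echo "=========="
    echo "User :" "$(whoami)"
    echo "Date :" "$(date --rfc-3339=seconds)"   # GNU date only
    echo "Branch:" "$(git branch --show-current)"
    echo ""
    echo "Commit"
    echo "------"
    git log -1 --no-color    # written directly, so its newlines are kept
  } > "$target"
}

# Demo in a throwaway repository with a single (empty) commit
repo="$(mktemp -d)"
git -C "$repo" init -q
git -C "$repo" -c user.name="Jane Doe" -c user.email="jane@example.com" \
  commit -q --allow-empty -m "Testing the new build-info file"
(cd "$repo" && create_build_info .build/build-info)
cat "$repo/.build/build-info"
```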

Build and push the docker images

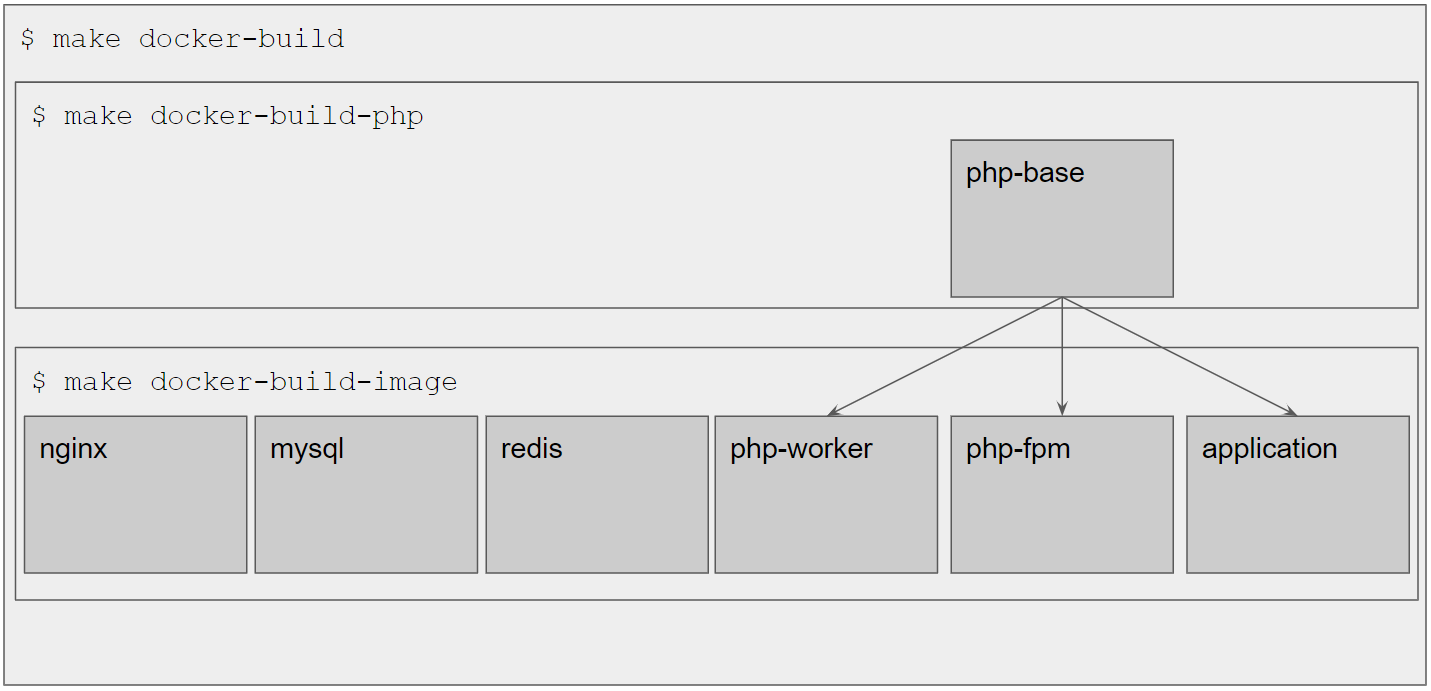

make is initialized with ENV=prod, i.e. calling make docker-build will use the correct docker compose config for building production images. In addition, we have adjusted the DOCKER_REGISTRY to gcr.io/pl-dofroscra-p in the .make/variables.env file, so that the images will immediately be tagged correctly as

gcr.io/pl-dofroscra-p/dofroscra/$service-prod

# e.g. for `php-base`

gcr.io/pl-dofroscra-p/dofroscra/php-base-prod

Example:

$ make docker-build

ENV=prod TAG=latest DOCKER_REGISTRY=gcr.io/pl-dofroscra-p DOCKER_NAMESPACE=dofroscra APP_USER_NAME=application APP_GROUP_NAME=application docker compose -p dofroscra_prod --env-file ./.docker/.env -f ./.docker/docker-compose/docker-compose-php-base.yml build php-base

#1 [internal] load build definition from Dockerfile

# ...

$ docker image ls

REPOSITORY TAG IMAGE ID CREATED SIZE

gcr.io/pl-dofroscra-p/dofroscra/php-fpm-prod latest 2be3bec977de 24 seconds ago 147MB

gcr.io/pl-dofroscra-p/dofroscra/php-worker-prod latest 6dbf14d1b329 25 seconds ago 181MB

gcr.io/pl-dofroscra-p/dofroscra/php-base-prod latest 9164976a78a6 32 seconds ago 130MB

gcr.io/pl-dofroscra-p/dofroscra/application-prod latest 377fdee0f12a 32 seconds ago 130MB

gcr.io/pl-dofroscra-p/dofroscra/nginx-prod latest 42dd1608d126 24 seconds ago 23.5MB
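The tagging boils down to string concatenation. As a sketch: image_name is a hypothetical helper, and the pattern is taken from the image: entries of the compose files (e.g. application-${ENV?}:${TAG?} in docker-compose.local.ci.prod.yml), assuming the prod values shown above:

```bash
#!/usr/bin/env bash
# Hypothetical helper mirroring the `image:` entries of the compose files:
#   ${DOCKER_REGISTRY}/${DOCKER_NAMESPACE}/<service>-${ENV}:${TAG}
# ${VAR:?} aborts with an error if a variable is unset or empty.
image_name() {
  local service="$1"
  echo "${DOCKER_REGISTRY:?}/${DOCKER_NAMESPACE:?}/${service}-${ENV:?}:${TAG:?}"
}

# Values as used for the prod build in this tutorial
DOCKER_REGISTRY=gcr.io/pl-dofroscra-p
DOCKER_NAMESPACE=dofroscra
ENV=prod
TAG=latest

for service in application php-base php-fpm php-worker nginx; do
  image_name "$service"
done
```

Because the registry is part of the name, docker push knows where to upload each image without any additional docker tag step.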

Thanks to the image names, we can also immediately push the images to the remote registry via make docker-push. Note that we see a lot of "Layer already exists" infos in the console output for the php-fpm and php-worker images. This is due to the fact that we use a common php-base base image for application, php-fpm and php-worker, i.e. those images have a lot of layers in common, and those shared layers are only pushed once (here: together with the application image). docker uses the layer hash to identify which layers already exist.

$ make docker-push

ENV=prod TAG=latest DOCKER_REGISTRY=gcr.io/pl-dofroscra-p DOCKER_NAMESPACE=dofroscra APP_USER_NAME=application APP_GROUP_NAME=application docker compose -p dofroscra_prod --env-file ./.docker/.env -f ./.docker/docker-compose/docker-compose.local.ci.prod.yml -f ./.docker/docker-compose/docker-compose.local.prod.yml -f ./.docker/docker-compose/docker-compose.prod.yml push

mysql Skipped

redis Skipped

Pushing application: c8f4416c4383 Preparing

#...

Pushing application: 6bbfa8829d07 Pushing [==================================================>] 3.584kB

#...

Pushing php-worker: 6bbfa8829d07 Layer already exists

#...

Pushing php-fpm: 6bbfa8829d07 Layer already exists

Create the deployment archive

As described in the introduction of the Deployment workflow section, we need to make our make and docker setup available on the VM. We solve this issue by creating a tar archive with all necessary files locally and transferring it to the VM.

The archive is created via the deployment-create-tar target

.PHONY: deployment-create-tar

deployment-create-tar:

# create the build directory

rm -rf .build/deployment

mkdir -p .build/deployment

# copy the necessary files

mkdir -p .build/deployment/.docker/docker-compose/

cp -r .docker/docker-compose/ .build/deployment/.docker/

cp -r .make .build/deployment/

cp Makefile .build/deployment/

cp .infrastructure/scripts/deploy.sh .build/deployment/

# make sure we don't have any .env files in the build directory (don't wanna leak any secrets) ...

find .build/deployment -name '.env' -delete

# ... apart from the .env file we need to start docker

cp .secrets/prod/docker.env .build/deployment/.docker/.env

# create the archive

tar -czvf .build/deployment.tar.gz -C .build/deployment/ ./

The recipe uses the .build/deployment directory as a temporary location to store all necessary files, i.e.

- the docker compose config files in .docker/docker-compose/
- the Makefile and the .make directory in the root of the codebase for the make setup
- the .infrastructure/scripts/deploy.sh script to run the deployment

In addition, we copy the .secrets/prod/docker.env file to use it as the .env file for docker compose. Caution: This only works because we have verified previously that there are no changes between the decrypted and encrypted .secret files (which also means that .secrets/prod/docker.env is already decrypted).

Once all files are copied, the whole directory is added to the .build/deployment.tar.gz archive via

tar -czvf .build/deployment.tar.gz -C .build/deployment/ ./

The -C .build/deployment/ option makes tar change into that directory before adding ./, so the paths in the archive are relative to it (e.g. ./Makefile instead of .build/deployment/Makefile). Thus, extracting the archive recreates exactly this directory structure in the target directory. For the remaining options take a look at How to create tar.gz file in Linux using command line.
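To see the effect of -C, you can build a miniature archive in a temporary directory. This sketch uses empty placeholder files instead of the real ones copied by deployment-create-tar:

```bash
#!/usr/bin/env bash
# Miniature version of the deployment archive with placeholder files only
work="$(mktemp -d)"
src="$work/.build/deployment"
mkdir -p "$src/.docker/docker-compose" "$src/.make"
touch "$src/Makefile" "$src/deploy.sh" "$src/.docker/.env"

# Same invocation as in deployment-create-tar (minus -v), run from the "repository root".
# The archive path given via -f is resolved before tar changes into .build/deployment/.
(cd "$work" && tar -czf .build/deployment.tar.gz -C .build/deployment/ ./)

# Member names are relative to .build/deployment/, e.g. ./Makefile and ./.docker/.env
tar -tzf "$work/.build/deployment.tar.gz"

# Extracting with -C recreates the same layout below the target directory,
# like `tar -xzvf deployment.tar.gz -C /tmp/codebase` does on the VM
mkdir -p "$work/codebase"
tar -xzf "$work/.build/deployment.tar.gz" -C "$work/codebase"
ls -A "$work/codebase"
```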

Deployment commands on the VM

Once the creation of the deployment archive is done, we can transfer the resulting .build/deployment.tar.gz file to the VM, extract it and run the deployment script. All of that is done via the deployment-run-on-vm target:

# directory on the VM that will contain the files to start the docker setup

CODEBASE_DIRECTORY=/tmp/codebase

.PHONY: deployment-run-on-vm

deployment-run-on-vm:## Run the deployment script on the VM

"$(MAKE)" -s gcp-scp-command SOURCE=".build/deployment.tar.gz" DESTINATION="deployment.tar.gz"

"$(MAKE)" -s gcp-ssh-command COMMAND="sudo rm -rf $(CODEBASE_DIRECTORY) && sudo mkdir -p $(CODEBASE_DIRECTORY) && sudo tar -xzvf deployment.tar.gz -C $(CODEBASE_DIRECTORY) && cd $(CODEBASE_DIRECTORY) && sudo bash deploy.sh"

Under the hood, the target uses the gcp-scp-command and gcp-ssh-command targets. The deployment archive is extracted in /tmp/codebase via

sudo rm -rf /tmp/codebase && sudo mkdir -p /tmp/codebase && sudo tar -xzvf deployment.tar.gz -C /tmp/codebase

and then the deployment script is executed

cd /tmp/codebase && sudo bash deploy.sh

All of those commands are run in a single invocation of gcp-ssh-command, because there's a certain overhead involved when tunneling commands via IAP, i.e. each invocation takes a couple of seconds.

The deploy.sh script

The actual deployment is done "on the VM" via the .infrastructure/scripts/deploy.sh script

#!/usr/bin/env bash

echo "Retrieving secrets"

make gcp-secret-get SECRET_NAME=GPG_KEY > secret.gpg

GPG_PASSWORD=$(make gcp-secret-get SECRET_NAME=GPG_PASSWORD)

echo "Creating compose-secrets.env file"

echo "GPG_PASSWORD=$GPG_PASSWORD" > compose-secrets.env

echo "Initializing the codebase"

make make-init ENVS="ENV=prod TAG=latest"

echo "Pulling images on the VM from the registry"

make docker-pull

echo "Stop the containers on the VM"

make docker-down || true

echo "Start the containers on the VM"

make docker-up

The script is located at the root of the codebase. It

- first retrieves the GPG_KEY and the GPG_PASSWORD values that we created previously in the Secret Manager
  - the GPG_KEY is stored in the file secret.gpg in the root of the codebase, so that it is picked up automatically when initializing gpg
    > [...] and the private key has to be named secret.gpg and put in the root of the codebase.
  - the GPG_PASSWORD is stored in the bash variable $GPG_PASSWORD and from there in a file called compose-secrets.env as
    GPG_PASSWORD=$GPG_PASSWORD
    This file is used in the docker-compose.prod.yml file as env_file for the php docker containers, so that the GPG_PASSWORD becomes available in the decrypt-secrets.sh script used in the ENTRYPOINT of the php-base image
- then initializes the make setup for the prod environment via
  make make-init ENVS="ENV=prod TAG=latest"
  so that all subsequent docker-* targets use the correct configuration
- and finally pulls the docker images we pushed in step "Build and push the docker images", stops any running containers and starts the whole docker setup with the new images
  - FYI: docker compose down would fail (exit with a non-zero status code) if no containers are running. Since this is fine for us (we simply want to ensure that no containers are running), the command is OR'd via make docker-down || true so that the script won't stop if that happens. Note that this only matters if the script runs with errexit enabled (e.g. via set -e); without it, bash simply continues after a failing command.
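The role of errexit can be observed with three separate bash processes. In this sketch, false is a stand-in for a make docker-down that exits with a non-zero status code:

```bash
#!/usr/bin/env bash
# `false` stands in for a failing `make docker-down`.
# Each scenario runs in its own bash process, so `set -e` only affects that process.

# 1) Without set -e (like deploy.sh as shown above), bash continues after a failure
bash -c 'false; echo "1) continued without set -e"'
# → 1) continued without set -e

# 2) With set -e, the failing command aborts the script immediately ...
bash -c 'set -e; false; echo "never printed"' || echo "2) aborted with exit code $?"
# → 2) aborted with exit code 1

# 3) ... unless the failure is neutralized via `|| true`
bash -c 'set -e; false || true; echo "3) continued thanks to || true"'
# → 3) continued thanks to || true
```

Keeping || true in the script is still a good idea: it documents that the failure is expected and keeps working if set -e is added later.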

Codebase changes

Before we dive into the docker stuff, let's quickly talk about some cleanup work in the codebase that you can get via part-9-deploy-docker-compose-php-gcp-poc.

Restructure the codebase

The .build directory

We already know this directory from a previous tutorial where we used it as a temporary directory to collect build artifacts from the CI pipeline. Now, we will make use of it again as a temporary directory to

- prepare the creation of the deployment archive
- create a build-info file to pass it to the docker daemon in the build context

The files in the directory are ignored via .gitignore as they are only temporarily required

# File: .gitignore

.build/*

However, since the build-info file must be passed to docker, we will have a slight deviation between the .gitignore and the .dockerignore file.

# File: .dockerignore

.build/*

# kept files

!.build/build-info

I'm mentioning this here specifically, because we usually strive for parity between .gitignore and .dockerignore.

The .secrets directory

Since we will store all secrets for all environments in the codebase, we will organize them by environment as subdirectories in a new .secrets directory:

.secrets/

├── ci

│ └── ci-secret.txt.secret

├── prod

│ ├── app.env.secret

│ └── docker.env.secret

└── shared

└── passwords.txt.secret

This will also make it easier to pick the correct files per environment when building the docker image and to select them for decryption in the ENTRYPOINT.

The .secrets/shared/ directory contains all secret files that are required by all environments, whereas .secrets/ci/ contains only ci secrets and .secrets/prod/ contains only prod secrets.

In our codebase there are already two files that contain actual secrets: The .env file and the .docker/.env file. Both of them contain the credentials for mysql and redis, and the .env file also contains the APP_KEY that is used by Laravel to encrypt cookies:

> Laravel uses the [APP_KEY] for all encrypted cookies, including the session cookie, before handing them off to the user's browser, and it uses it to decrypt cookies read from the browser.

# File: .env

APP_KEY=base64:C8X1hLE2bpok8OS+bJ1cTB9wNASJNRLibqUrDq2ls4Q=

DB_PASSWORD=production_secret_mysql_root_password

REDIS_PASSWORD=production_secret_redis_password

# File: .docker/.env

MYSQL_PASSWORD=production_secret

MYSQL_ROOT_PASSWORD=production_secret_mysql_root_password

REDIS_PASSWORD=production_secret_redis_password

I have created the encrypted .secret files by moving the unencrypted files to the .secrets/ directory and made sure that the unencrypted files are ignored by git via the following .gitignore rules

# ...

.secrets/*/*

!**/*.secret

Then I ran

make secret-add FILES=".secrets/*/*"

make secret-encrypt

$ make secret-add FILES=".secrets/*/*"

"C:/Program Files/Git/mingw64/bin/make" -s git-secret ARGS="add .secrets/*/*"

git-secret: 4 item(s) added.

$ make secret-encrypt

"C:/Program Files/Git/mingw64/bin/make" -s git-secret ARGS="hide"

git-secret: done. 4 of 4 files are hidden.

The .tutorial directory

I have mentioned before that I would normally not store secret gpg keys in the repository, but I'm still doing it in this tutorial so that it's easier to follow along. To make clear which files are affected by this "exception to the rule", I have moved them into a dedicated .tutorial directory:

.tutorial/

├── secret-ci-protected.gpg.example

├── secret-production-protected.gpg.example

└── secret.gpg.example

The .infrastructure directory

The .infrastructure directory contains all files that are used to manage the infrastructure and

deployments. The directory was introduced in the

previous part and looks as follows

.infrastructure/

├── scripts/

│ ├── deploy.sh

│ └── provision.sh

└── setup-gcp.sh

- the scripts directory contains files that are transferred to and then executed on the VM
  - deploy.sh is a script to perform all necessary deployment steps on the VM and is explained in more detail in section Deployment commands on the VM
  - provision.sh is a script to install docker and docker compose on the VM and contains the commands that are explained in section Installing docker and docker compose
- setup-gcp.sh is run to set up a VM initially and was described in more detail under Putting it all together

Add a gpg key for production

We have created a new gpg key pair for the prod environment when setting up the VM on GCP. The secret key is located at .tutorial/secret-production-protected.gpg.example and the public key at .dev/gpg-keys/production-public.gpg.

Show the build-info

As part of the deployment, we generate a build-info file that allows us to understand "which version of the codebase lives inside a container". Inside the docker images, this file is located at the root of the codebase, and we expose it as the web route /info (via the php-fpm container) and as the command info (via the application container).

routes/web.php

# ...

Route::get('/info', function () {

$info = file_get_contents(__DIR__."/../build-info");

return new \Illuminate\Http\Response($info, 200, ["Content-type" => "text/plain"]);

});

Show via curl http://localhost/info

routes/console.php

# ...

Artisan::command('info', function () {

$info = file_get_contents(__DIR__."/../build-info");

$this->line($info);

})->purpose('Display build information about the codebase');

Show via php artisan info

Optimize .gitignore

Laravel uses multiple .gitignore files to retain a directory structure, because empty directories cannot be added to git. This is a valid strategy, but it makes understanding "what is actually ignored" more complex.

In addition, it makes it harder to keep .gitignore and .dockerignore in sync, because we can't simply "copy the contents of the ./.gitignore" any longer as it might not contain all rules.

Thus, I have identified all directory-specific .gitignore files via

find . -path ./vendor -prune -o -name .gitignore -print

./.gitignore

./bootstrap/cache/.gitignore

./database/.gitignore

./storage/app/.gitignore

./storage/app/public/.gitignore

./storage/framework/.gitignore

./storage/framework/cache/.gitignore

./storage/framework/cache/data/.gitignore

./storage/framework/sessions/.gitignore

./storage/framework/testing/.gitignore

./storage/framework/views/.gitignore

./storage/logs/.gitignore

CAUTION: Usually, the files simply contain the rules

*

!.gitignore

The only exception is ./database/.gitignore which contains

*.sqlite*

TBH, I would rather consider this a bug, because this rule SHOULD actually live in the main .gitignore file. But for us it means that we need to keep this rule as

Before we add them to the .gitignore file, it is important to understand how git handles the gitignore file:

> An optional prefix "!" which negates the pattern; any matching file excluded by a previous pattern will become included again. It is not possible to re-include a file if a parent directory of that file is excluded. Git doesn’t list excluded directories for performance reasons, so any patterns on contained files have no effect, no matter where they are defined.

In other words: Consider the following structure

storage/

└── app

├── .gitignore

└── public

└── .gitignore

We might be tempted to write the rules as

storage/app/*

!storage/app/.gitignore

!storage/app/public/.gitignore

But that won't work as expected: storage/app/* ignores "everything" in the storage/app/ directory, so git wouldn't even look into the storage/app/public/ directory and thus wouldn't find the storage/app/public/.gitignore file! As a consequence, the rule !storage/app/public/.gitignore doesn't have any effect and the file would not be added to the git repository.

Instead, I need to allow the public directory explicitly by adding the rule !storage/app/public/ and ignore all files in it apart from the .gitignore file via storage/app/public/*. So we are essentially saying:

- ignore all files in the storage/app/ directory
- but NOT the storage/app/public/ directory ITSELF
- though do still ignore all files IN the storage/app/public/ directory
- but NOT the storage/app/public/.gitignore file

# ignore all files in the storage/app/ directory

storage/app/*

!storage/app/.gitignore

# but NOT the storage/app/public/ directory ITSELF

!storage/app/public/

# do still ignore all files IN storage/app/public/

storage/app/public/*

# but NOT the storage/app/public/.gitignore file

!storage/app/public/.gitignore

We can simplify the rules a little more via !**.gitignore, i.e. all .gitignore files in any directory should be included:

storage/app/*

!storage/app/public/

storage/app/public/*

!**.gitignore
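You can verify rules like these with git check-ignore, which exits with 0 if the given path is ignored and with 1 if it isn't. A minimal sketch that compares the naive and the working rule set in a throwaway repository (file names like file.txt and image.png are made up for illustration):

```bash
#!/usr/bin/env bash
# Throwaway repository with the storage/app structure from above
repo="$(mktemp -d)"
git -C "$repo" init -q
mkdir -p "$repo/storage/app/public"
touch "$repo/storage/app/.gitignore" "$repo/storage/app/file.txt" \
      "$repo/storage/app/public/.gitignore" "$repo/storage/app/public/image.png"

# `git check-ignore -q <path>` exits with 0 if <path> is ignored, 1 if not
is_ignored() { git -C "$repo" check-ignore -q "$1"; }

# Naive rules: storage/app/public/ itself is excluded, so the last rule has no effect
printf '%s\n' 'storage/app/*' '!storage/app/.gitignore' \
  '!storage/app/public/.gitignore' > "$repo/.gitignore"
if is_ignored storage/app/public/.gitignore; then
  echo "naive rules: storage/app/public/.gitignore is ignored"
fi

# Working rules: re-include the directory, then ignore its content again
printf '%s\n' 'storage/app/*' '!storage/app/public/' 'storage/app/public/*' \
  '!**.gitignore' > "$repo/.gitignore"
for path in storage/app/file.txt storage/app/public/image.png \
            storage/app/public/.gitignore storage/app/.gitignore; do
  if is_ignored "$path"; then echo "ignored:     $path"; else echo "not ignored: $path"; fi
done
```

The naive rule set reports storage/app/public/.gitignore as ignored, which is exactly the "parent directory is excluded" limitation quoted above.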

So the final rules for all directory-specific .gitignore files become

bootstrap/cache/*

database/*.sqlite*

storage/app/*

!storage/app/public/

storage/app/public/*

storage/framework/*

!storage/framework/cache/

storage/framework/cache/*

!storage/framework/cache/data/

storage/framework/cache/data/*

!storage/framework/sessions/

storage/framework/sessions/*

!storage/framework/testing/

storage/framework/testing/*

!storage/framework/views/

storage/framework/views/*

storage/logs/*

!**.gitignore

Caution: If Laravel changes the rules for their directory-specific .gitignore files, we must adjust our rules as well! Luckily, this usually only happens on major version upgrades.

The full .gitignore file becomes

**/*.env*

!.env.example

!.make/variables.env

.idea

.phpunit.result.cache

vendor/

secret.gpg

.gitsecret/keys/random_seed

.gitsecret/keys/pubring.kbx~

.secrets/*/*

!**/*.secret

gcp-service-account-key.json

gcp-master-service-account-key.json

# => directory-specific .gitignore files by Laravel

bootstrap/cache/*

database/*.sqlite*

storage/app/*

!storage/app/public/

storage/app/public/*

storage/framework/*

!storage/framework/cache/

storage/framework/cache/*

!storage/framework/cache/data/

storage/framework/cache/data/*

!storage/framework/sessions/

storage/framework/sessions/*

!storage/framework/testing/

storage/framework/testing/*

!storage/framework/views/

storage/framework/views/*

storage/logs/*

# => directory-specific .gitignore files from us

.build/*

# => keep ALL .gitignore files

!**.gitignore

It will be "synced" later with the .dockerignore file.

Docker changes

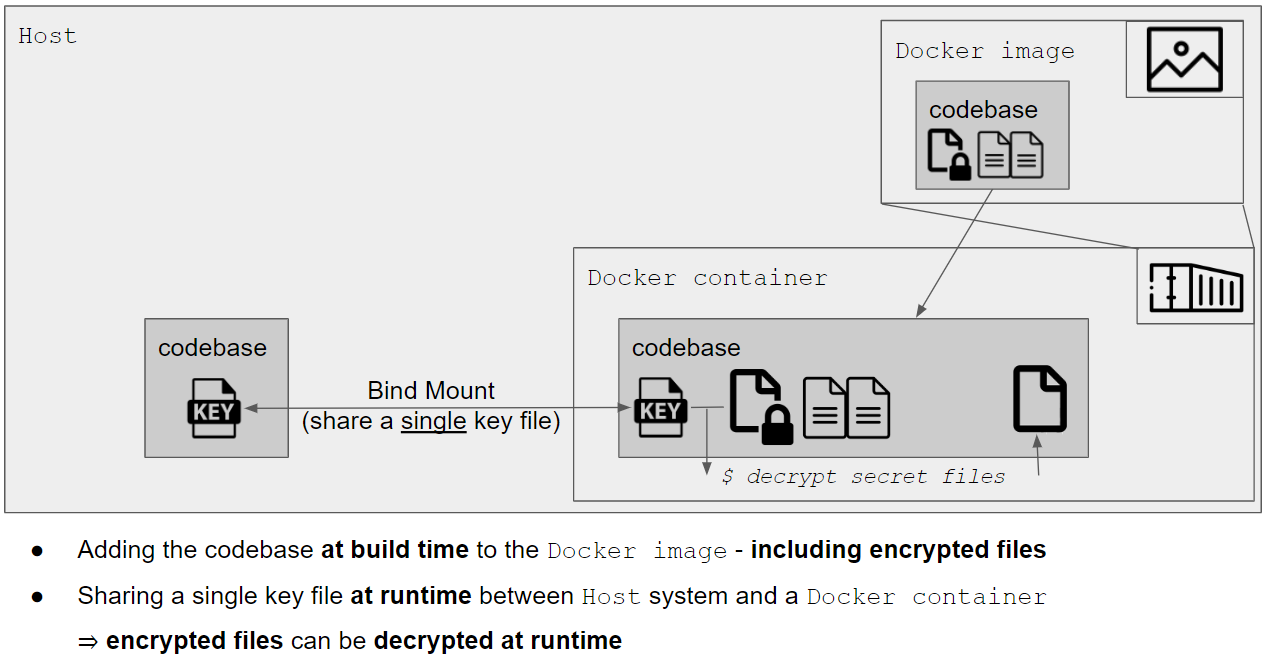

For this tutorial we will use docker compose to build the containers as well as to run them "in production", i.e. on the GCP VM. The docker compose configuration will essentially be a combination of the local and ci config from the previous tutorials:

- We will need all the services that we already know from the local environment, i.e. nginx, mysql, redis, php-worker, php-fpm and application
- The codebase will be baked into the image and we will bind mount a gpg secret key at runtime to decrypt the secrets, exactly as we did for the ci environment in a previous tutorial

In a previous tutorial we used the environment (ci) to identify the necessary docker-compose.yml configuration files and also as a new build target in the Dockerfiles. We'll stick to this process and add yet another environment called prod.

A .env file for prod

For our local and ci environments we didn't really care about the .env file that we used for docker compose and simply added a ready-to-use template at .docker/.env.example that is NOT ignored by git. Even though the file contains credentials, e.g. for mysql and redis, it's okay if those are "exposed" in the repository, because we won't store any production data in those databases in local and ci.

For prod however, the situation is different: We certainly do NOT want to expose the

credentials. Luckily we already have git secret set up and can thus simply add an encrypted template file at .secrets/prod/docker.env. We will later decrypt the file and add it to the deployment archive to transfer it to the VM in order to start the docker setup there.

Updating the docker-compose.yml configuration files

We will use the same technique as before to "assemble" our docker compose configuration, i.e. we use multiple compose files with environment specific settings. For prod, we use the files

- docker-compose.local.ci.prod.yml: contains config settings for all environments
- docker-compose.local.prod.yml: contains config settings only for local and prod
- docker-compose.prod.yml: contains config settings only for prod

The assembling is once again performed via Makefile.

docker-compose.local.ci.prod.yml

This file was simply renamed from docker-compose.local.ci.yml. It contains

- network and volume definitions

networks:
  network:
    driver: ${NETWORKS_DRIVER?}
volumes:
  mysql:
    name: mysql-${ENV?}
    driver: ${VOLUMES_DRIVER?}
  redis:
    name: redis-${ENV?}
    driver: ${VOLUMES_DRIVER?}

- the build instructions for the application service

application:
  image: ${DOCKER_REGISTRY?}/${DOCKER_NAMESPACE?}/application-${ENV?}:${TAG?}
  build:
    context: ../
    dockerfile: ./images/php/application/Dockerfile
    target: ${ENV?}
    args:
      - BASE_IMAGE=${DOCKER_REGISTRY?}/${DOCKER_NAMESPACE?}/php-base-${ENV?}:${TAG?}
      - ENV=${ENV?}

- the configuration for the mysql and redis services

mysql:
  image: mysql:${MYSQL_VERSION?}
  platform: linux/amd64
  environment:
    - MYSQL_DATABASE=${MYSQL_DATABASE:-application_db}
    - MYSQL_USER=${MYSQL_USER:-application_user}
    - MYSQL_PASSWORD=${MYSQL_PASSWORD?}
    - MYSQL_ROOT_PASSWORD=${MYSQL_ROOT_PASSWORD?}
    - TZ=${TIMEZONE:-UTC}
  networks:
    - network
  healthcheck:
    test: mysqladmin ping -h 127.0.0.1 -u $$MYSQL_USER --password=$$MYSQL_PASSWORD
    timeout: 1s
    retries: 30
    interval: 2s
redis:
  image: redis:${REDIS_VERSION?}
  command: >
    --requirepass ${REDIS_PASSWORD?}
  networks:
    - network
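The placeholders in these files follow shell-style parameter expansion: `${VAR?}` aborts if the variable is unset, and `${VAR:-default}` falls back to the default if the variable is unset or empty. Docker compose implements this interpolation itself; the following is only a plain-bash sketch of the same semantics, with made-up values:

```bash
#!/usr/bin/env bash
# Plain-bash illustration of the placeholder syntax used in the compose files.
# Bash is used here only because it follows the same rules for these forms.

unset MYSQL_DATABASE REDIS_PASSWORD
ENV=prod

# ${VAR:-default}: use the default when VAR is unset (or empty)
db_name="${MYSQL_DATABASE:-application_db}"
echo "database: ${db_name}"

# ${VAR?}: abort when VAR is unset. Run in a subshell so the demo can continue.
if ( : "${REDIS_PASSWORD?}" ) 2>/dev/null; then
  redis_check="ok"
else
  redis_check="missing"
fi
echo "REDIS_PASSWORD: ${redis_check}"

# ${VAR?} expands normally when the variable is set
volume_name="mysql-${ENV?}"
echo "volume: ${volume_name}"
```

This is why a missing value in the .env file makes compose fail early instead of silently using an empty string.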

docker-compose.local.prod.yml

This file is based on the docker-compose.local.yml of the previous tutorial without any settings for local development. It contains

- build instructions for the php-fpm, php-worker and nginx services (because we didn't need those in the configuration for the ci environment)

php-fpm:
  image: ${DOCKER_REGISTRY?}/${DOCKER_NAMESPACE?}/php-fpm-${ENV?}:${TAG?}
  build:
    context: ../
    dockerfile: ./images/php/fpm/Dockerfile
    target: ${ENV?}
    args:
      - BASE_IMAGE=${DOCKER_REGISTRY?}/${DOCKER_NAMESPACE?}/php-base-${ENV?}:${TAG?}
      - TARGET_PHP_VERSION=${PHP_VERSION?}
php-worker:
  image: ${DOCKER_REGISTRY?}/${DOCKER_NAMESPACE?}/php-worker-${ENV?}:${TAG?}
  build:
    context: ../
    dockerfile: ./images/php/worker/Dockerfile
    target: ${ENV?}
    args:
      - BASE_IMAGE=${DOCKER_REGISTRY?}/${DOCKER_NAMESPACE?}/php-base-${ENV?}:${TAG?}
      - PHP_WORKER_PROCESS_NUMBER=${PHP_WORKER_PROCESS_NUMBER:-4}
nginx:
  image: ${DOCKER_REGISTRY?}/${DOCKER_NAMESPACE?}/nginx-${ENV?}:${TAG?}
  build:
    context: ../
    dockerfile: ./images/nginx/Dockerfile
    target: ${ENV?}
    args:
      - NGINX_VERSION=${NGINX_VERSION?}
      - APP_CODE_PATH=${APP_CODE_PATH_CONTAINER?}
  ports:
    - "${NGINX_HOST_HTTP_PORT:-80}:80"
    - "${NGINX_HOST_HTTPS_PORT:-443}:443"

- port forwarding for the nginx service, because we want to forward incoming requests on the VM to the nginx docker container

nginx:
  ports:
    - "${NGINX_HOST_HTTP_PORT:-80}:80"
    - "${NGINX_HOST_HTTPS_PORT:-443}:443"

- volume configuration for the mysql and redis services

mysql:
  volumes:
    - mysql:/var/lib/mysql
redis:
  volumes:
    - redis:/data

The following settings are only retained in docker-compose.local.yml:

- bind mount of the codebase

application|php-fpm|php-worker|nginx:
  volumes:
    - ${APP_CODE_PATH_HOST?}:${APP_CODE_PATH_CONTAINER?}

- port sharing with the host system (excluding nginx ports)

application:
  ports:
    - "${APPLICATION_SSH_HOST_PORT:-2222}:22"
mysql:
  ports:
    - "${MYSQL_HOST_PORT:-3306}:3306"
redis:
  ports:
    - "${REDIS_HOST_PORT:-6379}:6379"

- any settings for local dev tools for all php images (application, php-fpm, php-worker)

application|php-fpm|php-worker:
  environment:
    - PHP_IDE_CONFIG=${PHP_IDE_CONFIG?}
  cap_add:
    - "SYS_PTRACE"
  security_opt:
    - "seccomp=unconfined"
  extra_hosts:
    - host.docker.internal:host-gateway

docker-compose.prod.yml

In this file,

- we bind-mount the secret gpg key into all php services (as we did in the previous tutorial for the docker-compose.ci.yml file)

volumes:
  - ${APP_CODE_PATH_HOST?}/secret.gpg:${APP_CODE_PATH_CONTAINER?}/secret.gpg:ro

- we provide an env file for all php services

env_file:
  - ../../compose-secrets.env

The env file is used to pass the GPG_PASSWORD as environment variable to the containers. This is required to decrypt the secrets on container start. See section Decrypt the secrets via ENTRYPOINT for a more in-depth explanation of the process and The deploy.sh script for the origin of the compose-secrets.env file.

Adjust the .dockerignore file

Since we have modified the .gitignore file, we need to adjust the .dockerignore file as well. In addition to the .gitignore rules, we need three additional rules:

- .git: Known from the previous tutorial. We don't need to transfer the .git directory to the build context!
- !.build/build-info: We don't need the content of the .build/ directory, but we do need the build-info file
- vendor/**: Has to be added as a workaround for the docker compose bug Inconsistent ".dockerignore" behavior between "docker build" and "docker compose build" that causes docker compose build to include .gitignore files in the vendor/ directory. This would mess with the build of the php-base image, as we expect that no vendor/ folder exists

So the full .dockerignore file becomes

# ---

# Rules from .gitignore

# ---

**/*.env*

!.env.example

!.make/variables.env

.idea

.phpunit.result.cache

vendor/

secret.gpg

.gitsecret/keys/random_seed

.gitsecret/keys/pubring.kbx~

.secrets/*/*

!**/*.secret

.build/*

!.gitkeep

gcp-service-account-key.json

# => directory-specific .gitignore files by Laravel

bootstrap/cache/*

database/*.sqlite*

storage/app/*

!storage/app/public/

storage/app/public/*

storage/framework/*

!storage/framework/cache/

storage/framework/cache/*

!storage/framework/cache/data/

storage/framework/cache/data/*

!storage/framework/sessions/

storage/framework/sessions/*

!storage/framework/testing/

storage/framework/testing/*

!storage/framework/views/

storage/framework/views/*

!storage/framework/logs/

storage/framework/logs/*

# => directory-specific .gitignore files from us

.build/*

# => keep ALL .gitignore files

!**.gitignore

# ---

# Rules specifically for .dockerignore

# ---

# => don't transfer the git directory

.git

# => keep the build-info file

!.build/build-info

# [WORKAROUND]

# temporary fix for https://github.com/docker/compose/issues/9508

# Otherwise, `docker compose build` would transfer the `.gitignore` files

# in the vendor/ directory to the build context

vendor/**

Build target: prod

For building the images for the prod environment, we stick to

the technique of the previous tutorial

once again. In short:

- initialize the make setup for ENV=prod via

make make-init ENVS="ENV=prod"

so that all subsequent make invocations use ENV=prod by default, see also section Initialize the shared variables of the previous tutorial
- the ENV is passed as environment variable to the docker compose command as defined in the .make/02-00-docker.mk Makefile include AND the config files for prod are selected

ENV=prod docker compose -p -f ./.docker/docker-compose/docker-compose.local.ci.prod.yml -f ./.docker/docker-compose/docker-compose.local.prod.yml -f ./.docker/docker-compose/docker-compose.prod.yml

- the ENV environment variable is used to define the target property as well as the image name in the docker compose config files. This can be verified via make docker-config, for instance

$ make docker-config
services:
  application:
    build:
      target: prod
    image: gcr.io/pl-dofroscra-p/dofroscra/application-prod:latest
# ...

For ci we only needed the php-base images as well as the application image. For prod

however, we need all images.

Build stage prod in the php-base image

We will re-use a lot of the code that we used in the previous tutorial to build the php-base

image - so I'd recommend giving the corresponding section

Build stage ci in the php-base image

a look if anything is not clear.

The relevant parts of the .docker/images/php/base/Dockerfile will be explained in the

following sections but are also shown here for the sake of a better overview:

ARG ALPINE_VERSION

ARG COMPOSER_VERSION

FROM composer:${COMPOSER_VERSION} as composer

FROM alpine:${ALPINE_VERSION} as base

ARG APP_USER_NAME

ARG APP_GROUP_NAME

ARG APP_CODE_PATH

ARG TARGET_PHP_VERSION

ARG ENV

ENV APP_USER_NAME=${APP_USER_NAME}

ENV APP_GROUP_NAME=${APP_GROUP_NAME}

ENV APP_CODE_PATH=${APP_CODE_PATH}

ENV TARGET_PHP_VERSION=${TARGET_PHP_VERSION}

ENV ENV=${ENV}

RUN apk add --no-cache php8~=${TARGET_PHP_VERSION}

COPY --from=composer /usr/bin/composer /usr/local/bin/composer

WORKDIR $APP_CODE_PATH

FROM base as codebase

# By only copying the composer files required to run composer install

# the layer will be cached and only invalidated when the composer dependencies are changed

COPY ./composer.json /dependencies/

COPY ./composer.lock /dependencies/

# use a cache mount to cache the composer dependencies

# this is essentially a cache that lives in Docker BuildKit (i.e. has nothing to do with the host system)

RUN --mount=type=cache,target=/tmp/.composer \

cd /dependencies && \

# COMPOSER_HOME=/tmp/.composer sets the home directory of composer that

# also controls where composer looks for the cache

# so we don't have to download dependencies again (if they are cached)

# @see https://stackoverflow.com/a/60518444 for the correct if-then-else syntax:

# - end all commands with ; \

# - except THEN and ELSE

if [ "$ENV" == "prod" ] ; \

then \

# on production, we don't want test dependencies

COMPOSER_HOME=/tmp/.composer composer install --no-scripts --no-plugins --no-progress -o --no-dev; \

else \

COMPOSER_HOME=/tmp/.composer composer install --no-scripts --no-plugins --no-progress -o; \

fi

# copy the full codebase

COPY . /codebase

# move the dependencies

RUN mv /dependencies/vendor /codebase/vendor

# remove files we don't require in the image to keep the image size small

RUN cd /codebase && \

rm -rf .docker/ .build/ .infrastructure/ && \

if [ "$ENV" == "prod" ] ; \

then \

# on production, we don't want tests

rm -rf tests/; \

fi

# Remove all secrets that are NOT required for the given ENV:

# `find /codebase/.secrets -type f -print` lists all files in the .secrets directory

# `grep -v "/\(shared\|$ENV\)/"` matches only the files that are NOT in the shared/ or $ENV/ (e.g. prod/) directories

# `grep -v ".secret\$"` ensures that we remove all files that are NOT ending in .secret

# FYI:

# the "$" has to be escaped with a "\"

# "Escaping is possible by adding a \ before the variable"

# @see https://docs.docker.com/engine/reference/builder/#environment-replacement

# `xargs rm -f` retrieves the remaining file and deletes them

# FYI:

# `xargs` is necessary to convert the stdin to args for `rm`

# @see https://stackoverflow.com/a/20307392/413531

# the `-f` flag is required so that `rm` doesn't fail if no files are matched

RUN find /codebase/.secrets -type f -print | grep -v "/\(shared\|$ENV\)/" | xargs rm -f && \

find /codebase/.secrets -type f -print | grep -v ".secret\$" | xargs rm -f && \

# list the remaining files for debugging purposes

find /codebase/.secrets -type f -print

# We need a git repository for git-secret to work (can be an empty one)

RUN cd /codebase && \

git init

FROM base as prod

# We will use a custom ENTRYPOINT to decrypt the secrets when the container starts.

# This way, we can store the secrets in their encrypted form directly in the image.

# Note: Because we defined a custom ENTRYPOINT, the default CMD of the base image

# will be overridden. Thus, we must explicitly re-define it here via `CMD ["/bin/sh"]`.

# This behavior is described in the docs as:

# "If CMD is defined from the base image, setting ENTRYPOINT will reset CMD to an empty value. In this scenario, CMD must be defined in the current image to have a value."

# @see https://docs.docker.com/engine/reference/builder/#understand-how-cmd-and-entrypoint-interact

COPY ./.docker/images/php/base/decrypt-secrets.sh /decrypt-secrets.sh

RUN chmod +x /decrypt-secrets.sh

CMD ["/bin/sh"]

ENTRYPOINT ["/decrypt-secrets.sh"]

COPY --from=codebase --chown=$APP_USER_NAME:$APP_GROUP_NAME /codebase $APP_CODE_PATH

COPY --chown=$APP_USER_NAME:$APP_GROUP_NAME ./.build/build-info $APP_CODE_PATH/build-info

ENV based branching

So far, we have used the ENV to determine the final build stage of the docker image, by

using it as value for the target property in the docker compose config files. This

introduces a certain level of flexibility, but we would be forced to duplicate code if the

same logic is required for multiple ENVs that use different build stages.

For RUN statements we can achieve branching on a more granular level by using if...else

conditions. The syntax is described in

this SO answer to "Dockerfile if else condition with external arguments":

- place a \ at the end of each line
- end each command with ; (except for the then and else lines)

Example:

RUN if [ "$ENV" == "prod" ] ; \

then \

echo "ENV is prod"; \

else \

echo "ENV is NOT prod"; \

fi

This works, because we don't just use the ENV as the build target, but we also pass it as a

build argument in the .docker/docker-compose/docker-compose-php-base.yml file:

services:
  php-base:
    image: ${DOCKER_REGISTRY?}/${DOCKER_NAMESPACE?}/php-base-${ENV?}:${TAG?}
    build:
      args:
        - ENV=${ENV?}
      target: ${ENV?}
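Docker executes the shell form of RUN via /bin/sh -c, so the if/else snippet can also be tried outside of Docker. A small sketch that runs the same construct through sh -c for two values of ENV; it uses = instead of ==, because POSIX test only specifies = (== is accepted by bash and by the Alpine shell used in the images, but not by every sh, e.g. dash):

```bash
#!/usr/bin/env bash
# Runs the if/else construct from the RUN example through "sh -c".
# The backslash-newlines are kept on purpose: sh treats them as line
# continuations, so the command is effectively a single line.
run_branch() {
  ENV="$1" sh -c 'if [ "$ENV" = "prod" ] ; \
then \
echo "ENV is prod"; \
else \
echo "ENV is NOT prod"; \
fi'
}

prod_result="$(run_branch prod)"
ci_result="$(run_branch ci)"
echo "$prod_result"
echo "$ci_result"
```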

Avoid composer dev dependencies

A production image should only contain the dependencies that are necessary to run the code in production. This explicitly excludes dependencies that are only required for development or testing.

This isn't only about image size, but also about security. E.g. take a look at

CVE-2017-9841 - a remote code execution

vulnerability in phpunit. In a

blog post (German) Sebastian Bergmann

(creator of PHPUnit) mentions that

A dependency like PHPUnit, that is only required for developing the software but not for running it, is not supposed to be deployed to the production system

Thus, we will add the

--no-dev flag of the composer install command

for ENV=prod:

FROM base as codebase

# ...

RUN --mount=type=cache,target=/tmp/.composer \

cd /dependencies && \

if [ "$ENV" == "prod" ] ; \

then \

COMPOSER_HOME=/tmp/.composer composer install --no-scripts --no-plugins --no-progress -o --no-dev; \

else \

COMPOSER_HOME=/tmp/.composer composer install --no-scripts --no-plugins --no-progress -o; \

fi

Note: See also section

Build the dependencies of the

previous tutorial for an explanation of the --mount=type=cache,target=/tmp/.composer part.
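The two composer install lines only differ by --no-dev. If the duplication bothers you, one option is to compute the extra flag once. This is a hypothetical refactoring, not what the tutorial uses; the sketch only prints the resulting command (the COMPOSER_HOME prefix is omitted) and sticks to POSIX sh syntax so the same idea would work in Alpine's /bin/sh:

```bash
#!/usr/bin/env bash
# Hypothetical helper: build the composer install command for a given ENV.
composer_install_command() {
  env_name="$1"
  no_dev_flag=""
  if [ "$env_name" = "prod" ]; then
    # production images must not contain dev dependencies such as phpunit
    no_dev_flag="--no-dev"
  fi
  # ${var:+ word} expands to " word" only if var is non-empty,
  # so non-prod environments don't get a stray empty argument
  echo "composer install --no-scripts --no-plugins --no-progress -o${no_dev_flag:+ $no_dev_flag}"
}

prod_cmd="$(composer_install_command prod)"
ci_cmd="$(composer_install_command ci)"
echo "$prod_cmd"
echo "$ci_cmd"
```

The trade-off is readability: the explicit if/else in the Dockerfile is longer, but it shows both commands verbatim.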

Remove unnecessary directories

We already learned that no unnecessary stuff should end up in the image. This doesn't stop at composer dependencies, but in our case also includes some other directories:

- .docker (the docker setup files)
- .build (used to pass the build-info file)
- .infrastructure (see The .infrastructure directory)
- tests (the test files, only removed for ENV=prod)

FROM base as codebase

# ...

RUN cd /codebase && \

rm -rf .docker/ .build/ .infrastructure/ && \

if [ "$ENV" == "prod" ] ; \

then \

rm -rf tests/; \

fi

Remove secrets for other environments

We have re-organized the secrets previously so that all

secrets for an environment are located in the corresponding .secrets/$ENV/ subdirectory. We can

now make use of that separation to keep only the secret files that we actually need. This

adds an additional layer of security, because everybody with a correct secret gpg key file can

decrypt all the secrets. But: If our "prod secrets" don't even exist in the "ci images"

they also cannot be leaked if ci is compromised.

FROM base as codebase

# ...

RUN find /codebase/.secrets -type f -print | grep -v "/\(shared\|$ENV\)/" | xargs rm -f && \

find /codebase/.secrets -type f -print | grep -v ".secret\$" | xargs rm -f && \

# list the remaining files for debugging purposes

find /codebase/.secrets -type f -print

Notes:

- find /codebase/.secrets -type f -print lists all files in the .secrets directory
- grep -v "/\(shared\|$ENV\)/" matches only the files that are NOT in the shared/ or $ENV/ (e.g. prod/) directories
- grep -v ".secret\$" ensures that we remove all files that are NOT ending in .secret
  - as per documentation, the $ has to be escaped with a \: "Escaping is possible by adding a \ before the variable"
- xargs rm -f retrieves the remaining files and deletes them
  - xargs is necessary to convert the stdin to arguments for rm
  - the -f flag is required so that rm doesn't fail if no files are matched
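To see which files the two find | grep | xargs rm -f pipelines keep, the following sketch recreates a made-up .secrets/ layout in a temporary directory and runs the same commands for ENV=prod. The file names are invented for illustration:

```bash
#!/usr/bin/env bash
# No pipefail on purpose: as in the Dockerfile's RUN instruction, only the
# exit code of the last command in each pipeline (xargs) counts.
set -eu
ENV=prod
root="$(mktemp -d)"
trap 'rm -rf "$root"' EXIT

# made-up example files
mkdir -p "$root/.secrets/shared" "$root/.secrets/prod" "$root/.secrets/ci"
touch "$root/.secrets/shared/passwords.txt.secret" \
      "$root/.secrets/prod/app.env.secret" \
      "$root/.secrets/prod/app.env" \
      "$root/.secrets/ci/app.env.secret"

# 1) remove everything that is neither in shared/ nor in $ENV/
find "$root/.secrets" -type f -print | grep -v "/\(shared\|$ENV\)/" | xargs rm -f
# 2) remove everything that is not an encrypted .secret file
find "$root/.secrets" -type f -print | grep -v ".secret\$" | xargs rm -f

# list what is left, relative to the temp dir
remaining="$(cd "$root" && find .secrets -type f -print | sort | paste -sd ' ' -)"
echo "$remaining"
```

Only the encrypted files of shared/ and prod/ survive; the ci/ secret and the unencrypted prod/app.env are gone.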

Decrypt the secrets via ENTRYPOINT

In the CI pipeline setup we decrypt the secret files in a manual step after the containers have been started, see Run details:

[...] then, the docker setup is started [...] and gpg is initialized so that the secrets can be decrypted

make gpg-init
make secret-decrypt-with-password

It "works" as long as no secrets are required when the container starts. This is unfortunately

no longer the case, because the php-worker container will start its workers immediately, and

they require a valid .env file - but

the .env file for prod (app.env) is only stored encrypted in the image.

Thus, we must ensure that the secrets are decrypted as soon as the container starts -

preferably before any other command is run. This sounds like

the perfect job for ENTRYPOINT:

the ENTRYPOINT is executed every time we run a container. Some things can't be done during build but only at runtime [...] - ENTRYPOINT is a good solution for that problem

In our case, the ENTRYPOINT should

- initialize gpg (i.e. "read the secret gpg key") via

make gpg-init

- decrypt the secrets via

make secret-decrypt-with-password

- move/copy the decrypted files if necessary

cp .secrets/prod/app.env .env

and I have created a corresponding script at .docker/images/php/base/decrypt-secrets.sh:

#!/usr/bin/env bash

# exit immediately on error

set -e

# initialize make

make make-init ENVS="ENV=prod GPG_PASSWORD=$GPG_PASSWORD"

# read the secret gpg key

make gpg-init

# Only decrypt files required for production

files=$(make secret-list | grep "/\(shared\|prod\)/" | tr '\n' ' ')

make secret-decrypt-with-password FILES="$files"

cp .secrets/prod/app.env .env

# treat this script as a "decorator" and execute any other command after running it

# @see https://www.pascallandau.com/blog/structuring-the-docker-setup-for-php-projects/#using-entrypoint-for-pre-run-configuration

exec "$@"

Notes:

- make make-init ENVS="ENV=prod GPG_PASSWORD=$GPG_PASSWORD" initializes make and requires that an environment variable named $GPG_PASSWORD exists
  - this is ensured via the env_file property in docker-compose.prod.yml
- make gpg-init requires that a secret gpg key exists at ./secret.gpg (see Local git-secret and gpg setup)
  - this is ensured via the bind mount in docker-compose.prod.yml
- files=$(make secret-list | grep "/\(shared\|prod\)/" | tr '\n' ' ') retrieves all secret files that are relevant for production
  - make secret-list will list all secret files
  - grep "/\(shared\|prod\)/" reduces the list to only the ones in the .secrets/shared/ and .secrets/prod/ directories
  - tr '\n' ' ' replaces new lines with spaces in the result so that the $files variable can be passed safely as an argument to the next command
- via make secret-decrypt-with-password FILES="$files" we make sure to only decrypt files that are relevant for production. This is important, because the prod image only contains production secrets; secrets for any other environment will not be part of the image. If we didn't provide a dedicated list of files, git-secret would attempt to decrypt all secrets that it knows and would fail with the following error if an encrypted file is missing:

gpg: can't open '/missing-file.secret': No such file or directory
gpg: decrypt_message failed: No such file or directory
git-secret: abort: problem decrypting file with gpg: exit code 2: /missing-file

  - in addition, the secret-decrypt-with-password target expects that the GPG_PASSWORD variable is populated (see first point)
- the last line exec "$@" ensures that everything "works as before", i.e. the CMD of the container is passed to the script as arguments and executed once the secrets have been decrypted (e.g. php-fpm will still invoke the php-fpm process)
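The exec "$@" line turns the script into a "decorator": Docker passes the CMD as arguments to an exec-form ENTRYPOINT, the script does its pre-run work and then replaces itself with that command. A minimal sketch of the pattern, where a hypothetical entrypoint.sh writes a dummy .env file instead of decrypting secrets:

```bash
#!/usr/bin/env bash
set -euo pipefail
workdir="$(mktemp -d)"
trap 'rm -rf "$workdir"' EXIT

# hypothetical stand-in for decrypt-secrets.sh
cat > "$workdir/entrypoint.sh" <<'EOF'
#!/usr/bin/env bash
set -e
# pre-run step: placeholder for "make gpg-init" + "make secret-decrypt-with-password"
echo "APP_ENV=prod" > .env
# replace this shell process with the actual command (the container's CMD)
exec "$@"
EOF

# simulates `docker run <image> cat .env`: Docker would pass "cat .env" as "$@"
# (the script is invoked via bash so it also works on noexec temp dirs)
output="$(cd "$workdir" && bash ./entrypoint.sh cat .env)"
echo "$output"
```

Because of exec, the CMD process takes over the script's PID instead of running as a child, so signals sent to the container reach it directly.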

The final code in the Dockerfile looks like this:

FROM base as prod

COPY ./.docker/images/php/base/decrypt-secrets.sh /decrypt-secrets.sh

RUN chmod +x /decrypt-secrets.sh

CMD ["/bin/sh"]

ENTRYPOINT ["/decrypt-secrets.sh"]

Note: Because we defined a custom ENTRYPOINT, the default CMD of the base image will be

overridden. Thus, we must explicitly re-define it here via CMD ["/bin/sh"]. This behavior is also

described in the docs:

If CMD is defined from the base image, setting ENTRYPOINT will reset CMD to an empty value. In this scenario, CMD must be defined in the current image to have a value.

Copy codebase and build-info file

As before, we will

copy the final "build artifact" from the codebase build stage

to only retain a single layer in the image. In addition, we also copy

the build-info file from ./.build/build-info to the root of the

codebase.

FROM base as prod

COPY --from=codebase --chown=$APP_USER_NAME:$APP_GROUP_NAME /codebase $APP_CODE_PATH

COPY --chown=$APP_USER_NAME:$APP_GROUP_NAME ./.build/build-info $APP_CODE_PATH/build-info

Build stage prod in the remaining images

In the remaining images for nginx, php-fpm, php-worker and application, there are no

dedicated instructions for the prod target. We must still define the build stage, though, via

FROM base as prod

Otherwise, the build would fail with the error

failed to solve: rpc error: code = Unknown desc = failed to solve with frontend dockerfile.v0: failed to create LLB definition: target stage prod could not be found

Makefile changes

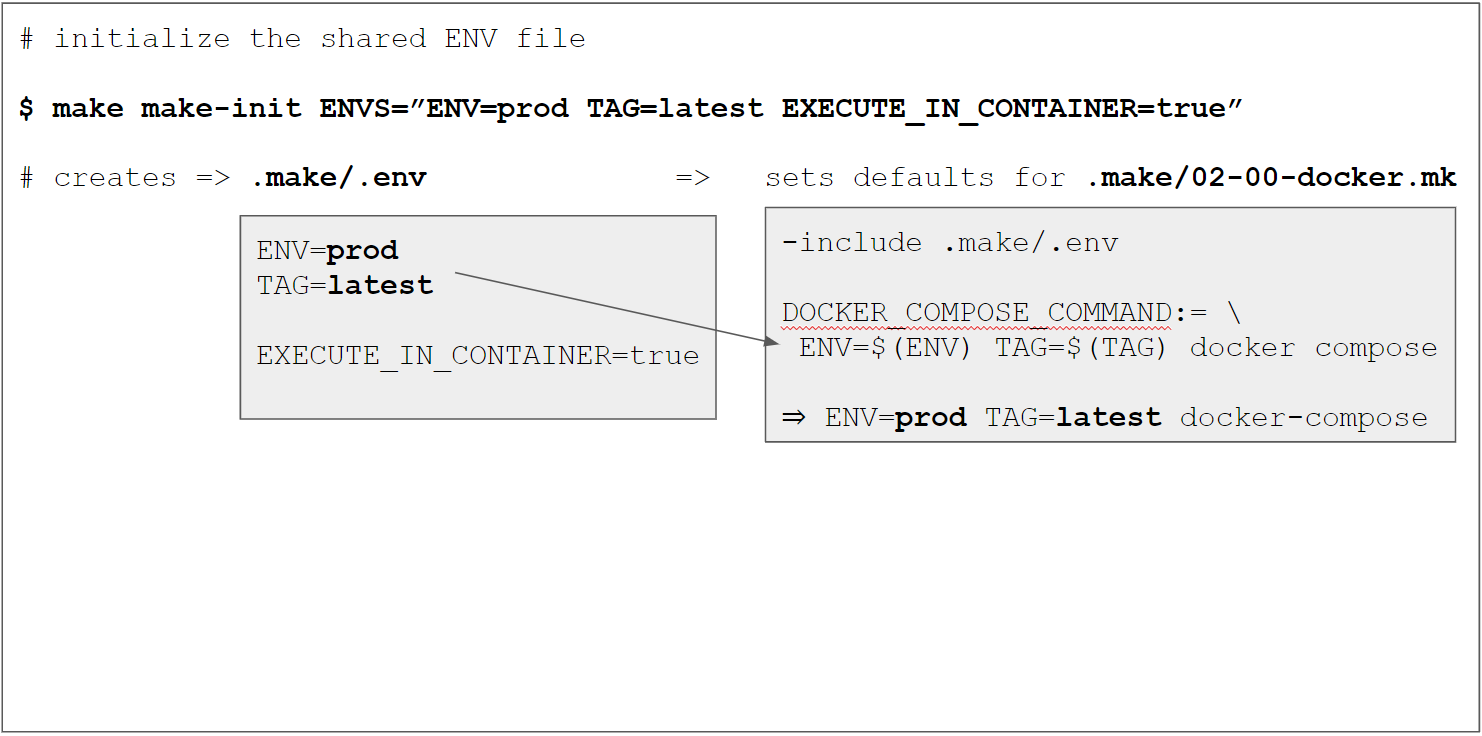

I have updated the default help target that prints all available commands to also include some information about the current environment (as set e.g. via make make-init ENVS="ENV=prod").

The full recipe is

help:

@printf '%-43s \033[1mDefault values: \033[0m \n'

@printf '%-43s ===================================\n'

@printf '%-43s ENV: \033[31m "$(ENV)" \033[0m \n'

@printf '%-43s TAG: \033[31m "$(TAG)" \033[0m \n'

@printf '%-43s ===================================\n'

@printf '%-43s \033[3mRun the following command to set them:\033[0m\n'

@printf '%-43s \033[1mmake make-init ENVS="ENV=prod TAG=latest"\033[0m\n'

@awk 'BEGIN {FS = ":.*##"; printf "\n\033[1mUsage:\033[0m\n make \033[36m<target>\033[0m\n"} /^[a-zA-Z0-9_-]+:.*?##/ { printf " \033[36m%-40s\033[0m %s\n", $$1, $$2 } /^##@/ { printf "\n\033[1m%s\033[0m\n", substr($$0, 5) } ' Makefile .make/*.mk

and will now print a header showing the values of the $(ENV) and $(TAG) variables:

$ make

Default values:

===================================

ENV: "local"

TAG: "latest"

===================================

Run the following command to set them:

make make-init ENVS="ENV=prod TAG=latest"

Usage:

make <target>

[Make]

make-init Initializes the local .makefile/.env file with ENV variables for make. Use via ENVS="KEY_1=value1 KEY_2=value2"

[Application: Setup]
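The header lines in the help recipe use printf '%-43s ...' without any argument. In that case printf substitutes an empty string and pads it to 43 characters, which simply indents the rest of the line. A small bash sketch; in the Makefile, make has already replaced $(ENV) with its value before the shell runs printf, so "prod" stands in for it here:

```bash
#!/usr/bin/env bash
# "%-43s" without a matching argument prints an empty string padded to 43 chars
line="$(printf '%-43s ENV: "prod"\n')"
echo "$line"

# everything before "ENV" is indentation: 43 padded spaces + 1 literal space
indent="${line%%ENV*}"
echo "indent: ${#indent} characters"
```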

Adding GCP values to .make/variables.env

The .make/variables.env file contains the "default" shared variables that are neither "secret" nor likely to be changed (see Initialize the shared variables).

Those variables include the "ingredients" for the image naming convention

$(DOCKER_REGISTRY)/$(DOCKER_NAMESPACE)/$(DOCKER_SERVICE_NAME)-$(ENV)

Since we will now

use our own registry, we

need to change the DOCKER_REGISTRY value from docker.io to gcr.io/pl-dofroscra-p (see section

Pushing images to the registry).

In addition, we will need three more GCP specific variables that are required for the new

gcloud cli make targets:

- GCP_PROJECT_ID: The GCP project id
- GCP_ZONE: The availability zone of the GCP VM
- GCP_VM_NAME: The name of the GCP VM

So the full content of .make/variables.env becomes

DOCKER_REGISTRY=gcr.io/pl-dofroscra-p

DOCKER_NAMESPACE=dofroscra

APP_USER_NAME=application

APP_GROUP_NAME=application

GCP_PROJECT_ID=pl-dofroscra-p

GCP_ZONE=us-central1-a

GCP_VM_NAME=dofroscra-test
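To check how these values feed the image naming convention (plus the :$(TAG) suffix used in the compose files), the following bash sketch sources a copy of the file and assembles one image name. Sourcing the file with bash is only an illustration; the ENV, TAG and DOCKER_SERVICE_NAME values are example inputs:

```bash
#!/usr/bin/env bash
set -euo pipefail
vars_file="$(mktemp)"
trap 'rm -f "$vars_file"' EXIT

# copy of .make/variables.env from above
cat > "$vars_file" <<'EOF'
DOCKER_REGISTRY=gcr.io/pl-dofroscra-p
DOCKER_NAMESPACE=dofroscra
APP_USER_NAME=application
APP_GROUP_NAME=application
GCP_PROJECT_ID=pl-dofroscra-p
GCP_ZONE=us-central1-a
GCP_VM_NAME=dofroscra-test
EOF

set -a            # export every variable assigned while sourcing
. "$vars_file"
set +a

# example inputs
ENV=prod
TAG=latest
DOCKER_SERVICE_NAME=application

image="${DOCKER_REGISTRY}/${DOCKER_NAMESPACE}/${DOCKER_SERVICE_NAME}-${ENV}:${TAG}"
echo "$image"
```

The result matches the image name printed by make docker-config for the application service.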

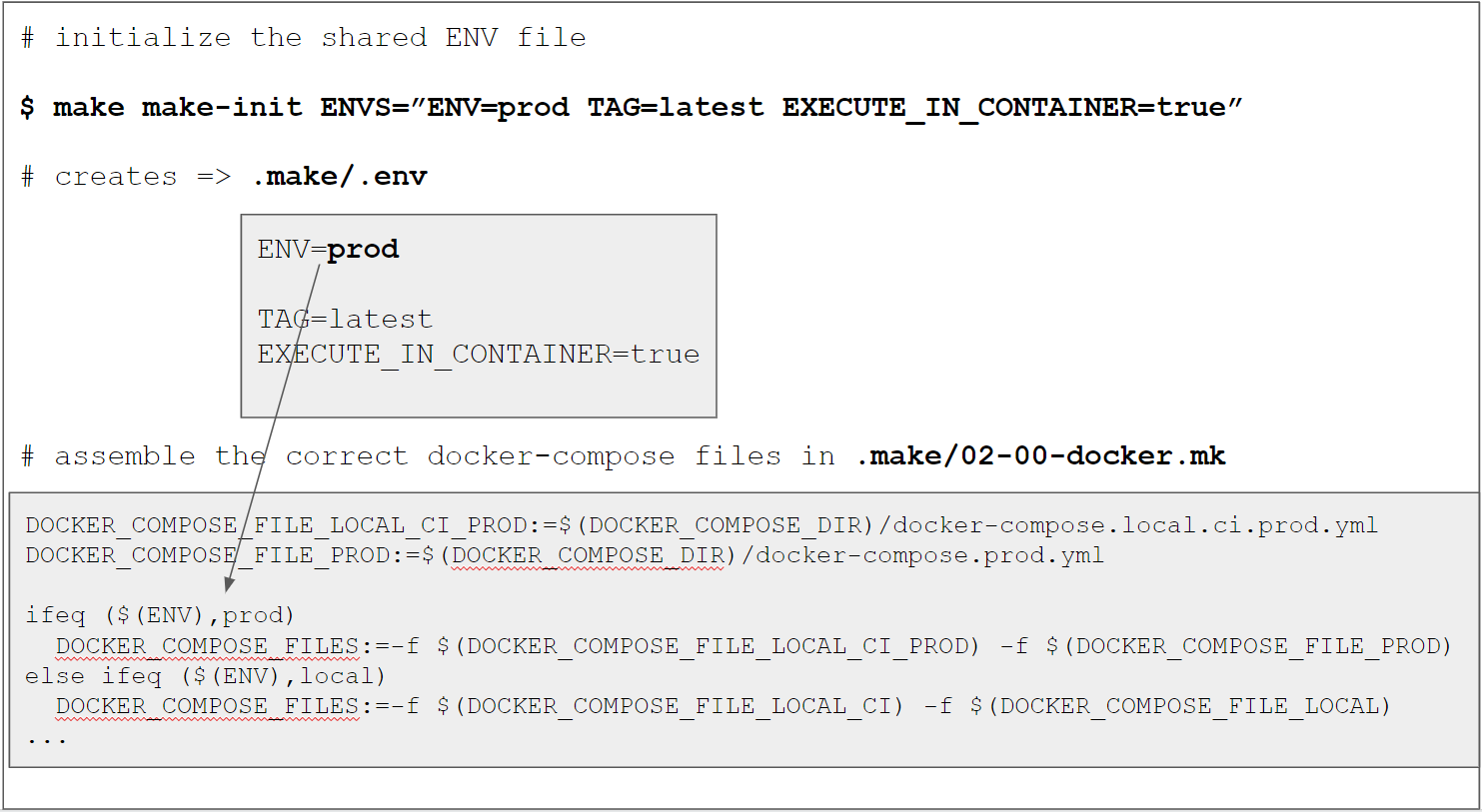

ENV based docker compose config

We use the same technique as described

in the previous tutorial to assemble the docker compose config files

by adding the config files for ENV=prod and the corresponding DOCKER_COMPOSE_FILES

definition in .make/02-00-docker.mk:

# File .make/02-00-docker.mk

# ...

DOCKER_COMPOSE_DIR:=...

DOCKER_COMPOSE_COMMAND:=...

DOCKER_COMPOSE_FILE_LOCAL_CI_PROD:=$(DOCKER_COMPOSE_DIR)/docker-compose.local.ci.prod.yml

DOCKER_COMPOSE_FILE_LOCAL_PROD:=$(DOCKER_COMPOSE_DIR)/docker-compose.local.prod.yml

DOCKER_COMPOSE_FILE_LOCAL:=$(DOCKER_COMPOSE_DIR)/docker-compose.local.yml

DOCKER_COMPOSE_FILE_CI:=$(DOCKER_COMPOSE_DIR)/docker-compose.ci.yml

DOCKER_COMPOSE_FILE_PROD:=$(DOCKER_COMPOSE_DIR)/docker-compose.prod.yml

ifeq ($(ENV),prod)

DOCKER_COMPOSE_FILES:=-f $(DOCKER_COMPOSE_FILE_LOCAL_CI_PROD) -f $(DOCKER_COMPOSE_FILE_LOCAL_PROD) -f $(DOCKER_COMPOSE_FILE_PROD)

else ifeq ($(ENV),ci)

DOCKER_COMPOSE_FILES:=-f $(DOCKER_COMPOSE_FILE_LOCAL_CI_PROD) -f $(DOCKER_COMPOSE_FILE_CI)

else ifeq ($(ENV),local)

DOCKER_COMPOSE_FILES:=-f $(DOCKER_COMPOSE_FILE_LOCAL_CI_PROD) -f $(DOCKER_COMPOSE_FILE_LOCAL_PROD) -f $(DOCKER_COMPOSE_FILE_LOCAL)

endif

DOCKER_COMPOSE:=$(DOCKER_COMPOSE_COMMAND) $(DOCKER_COMPOSE_FILES)

FYI: There is no dedicated docker compose config file for settings that only affect ci and

prod (i.e. docker-compose.ci.prod.yml).

The "final" $(DOCKER_COMPOSE_FILES) variable will look like this:

-f ./.docker/docker-compose/docker-compose.local.ci.prod.yml -f ./.docker/docker-compose/docker-compose.local.prod.yml -f ./.docker/docker-compose/docker-compose.prod.yml

and the "full" $(DOCKER_COMPOSE) variable like this:

ENV=prod TAG=latest DOCKER_REGISTRY=gcr.io/pl-dofroscra-p DOCKER_NAMESPACE=dofroscra APP_USER_NAME=application APP_GROUP_NAME=application docker compose -p dofroscra_prod --env-file ./.docker/.env -f ./.docker/docker-compose/docker-compose.local.ci.prod.yml -f ./.docker/docker-compose/docker-compose.local.prod.yml -f ./.docker/docker-compose/docker-compose.prod.yml
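For readers who find Make conditionals hard to scan, here is the same selection logic re-implemented as a bash case statement. It is an illustration only, not part of the repository; the directory is taken from the resulting DOCKER_COMPOSE_FILES value shown above:

```bash
#!/usr/bin/env bash
set -euo pipefail
# Bash version of the ifeq/else ifeq block in .make/02-00-docker.mk
compose_files_for() {
  local dir="./.docker/docker-compose"
  local shared="-f $dir/docker-compose.local.ci.prod.yml"
  case "$1" in
    prod)  printf '%s\n' "$shared -f $dir/docker-compose.local.prod.yml -f $dir/docker-compose.prod.yml" ;;
    ci)    printf '%s\n' "$shared -f $dir/docker-compose.ci.yml" ;;
    local) printf '%s\n' "$shared -f $dir/docker-compose.local.prod.yml -f $dir/docker-compose.local.yml" ;;
    # the Makefile would simply leave DOCKER_COMPOSE_FILES undefined here
    *)     return 1 ;;
  esac
}

prod_files="$(compose_files_for prod)"
ci_files="$(compose_files_for ci)"
echo "$prod_files"
```

Every environment starts from docker-compose.local.ci.prod.yml, and later -f files override earlier ones, which is why the most specific file comes last.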

Changes to the git-secret recipes

The git-secret recipes are defined in 01-00-application-setup.mk and were originally added in

Use git secret to encrypt secrets in the repository: Makefile adjustments.

I have modified the targets secret-decrypt and secret-decrypt-with-password to accept an optional list of files to decrypt via the FILES variable. If the variable is empty, all files are decrypted. This is required for the decrypt-secrets.sh script, because we will only store the secrets that are relevant for the currently built environment in the image, and the decryption would fail if we attempted to decrypt files that don't exist.

# ...

.PHONY: secret-decrypt

secret-decrypt: ## Decrypt secret files via `git-secret reveal -f`. Use FILES=file1 to decrypt only file1 instead of all files

"$(MAKE)" -s git-secret ARGS="reveal -f $(FILES)"

.PHONY: secret-decrypt-with-password

secret-decrypt-with-password: ## Decrypt secret files using a password for gpg. Use FILES=file1 to decrypt only file1 instead of all files

@$(if $(GPG_PASSWORD),,$(error GPG_PASSWORD is undefined))

"$(MAKE)" -s git-secret ARGS="reveal -f -p $(GPG_PASSWORD) $(FILES)"

In addition, I made a minor adjustment to the targets secret-add, secret-cat and

secret-remove to use the variable name FILES (plural) instead of FILE, because all of

them can also work with a list of files instead of just a single one.

Additional docker recipes

The following targets have been added to .make/02-00-docker.mk

compose-secrets.env:

@echo '# This file only exists because docker compose cannot run `build` otherwise,' > ./compose-secrets.env

@echo '# because it is referenced as an `env_file` in the docker compose config file' >> ./compose-secrets.env

@echo '# for the `prod` environment. On `prod` it will contain the necessary ENV variables,' >> ./compose-secrets.env

@echo '# but on all other environments this "placeholder" file is created.' >> ./compose-secrets.env

@echo '# The file is generated automatically via `make` if a docker compose target is executed' >> ./compose-secrets.env

@echo '# @see https://github.com/docker/compose/issues/1973#issuecomment-1148257736' >> ./compose-secrets.env

.PHONY: docker-push

docker-push: validate-docker-variables ## Push all docker images to the remote repository

$(DOCKER_COMPOSE) push $(ARGS)

.PHONY: docker-pull

docker-pull: validate-docker-variables ## Pull all docker images from the remote repository

$(DOCKER_COMPOSE) pull $(ARGS)

.PHONY: docker-exec

docker-exec: validate-docker-variables ## Execute a command in a docker container. Usage: `make docker-exec DOCKER_SERVICE_NAME="application" DOCKER_COMMAND="echo 'Hello world!'"`

@$(if $(DOCKER_SERVICE_NAME),,$(error "DOCKER_SERVICE_NAME is undefined"))

@$(if $(DOCKER_COMMAND),,$(error "DOCKER_COMMAND is undefined"))

$(DOCKER_COMPOSE) exec -T $(DOCKER_SERVICE_NAME) $(DOCKER_COMMAND)

# @see https://www.linuxfixes.com/2022/01/solved-how-to-test-dockerignore-file.html

# helpful to debug a .dockerignore file

.PHONY: docker-show-build-context

docker-show-build-context: ## Show all files that are in the docker build context for `docker build`

@printf 'FROM busybox\nCOPY . /codebase\nCMD find /codebase -print\n' | docker image build --no-cache -t build-context -f - .

@docker run --rm build-context | sort

# `docker build` and `docker compose build` are behaving differently

# @see https://github.com/docker/compose/issues/9508

.PHONY: docker-show-compose-build-context

docker-show-compose-build-context: ## Show all files that are in the docker build context for `docker compose build`

@.dev/scripts/docker-compose-build-context/show-build-context.sh

# Note: This is only a temporary target until https://github.com/docker/for-win/issues/12742 is fixed

.PHONY: docker-fix-mount-permissions

docker-fix-mount-permissions: ## Fix the permissions of the bind-mounted folder, @see https://github.com/docker/for-win/issues/12742

$(EXECUTE_IN_APPLICATION_CONTAINER) ls -al

- compose-secrets.env ensures that the file ./compose-secrets.env exists in the root of the repository. The target is added as a prerequisite to the validate-docker-variables target so that it is checked whenever another docker compose target is run

.PHONY: validate-docker-variables
validate-docker-variables: .docker/.env compose-secrets.env

Without this file, docker compose build would fail for the prod environment, because the file only exists "on the VM" and is defined as env_file setting in docker-compose.prod.yml. This is one of those annoying cases when the dual build-and-run usage of docker compose gets in the way, see also GH issue "Allow some commands to run without full config validation"
- docker-push uses docker compose push to push all defined services to the registry
- docker-pull uses docker compose pull to pull all defined services from the registry
- docker-exec uses docker compose exec to run arbitrary commands in the docker container that is specified via the $(DOCKER_SERVICE_NAME) variable. The command itself has to be passed via the $(DOCKER_COMMAND) variable
- docker-show-build-context is a small helper script that builds a temporary image and lists all files in the build context, which is very helpful to debug entries in the .dockerignore file. See also: [SOLVED] How to test dockerignore file?
- docker-show-compose-build-context does the same but for docker compose build, which currently (in docker compose v2.5.1) seems to behave differently than docker build
- docker-fix-mount-permissions is only a temporary target that provides a workaround for a bug I filed in the Docker Desktop for Windows repository: Ownership of files set via bind mount is set to user who accesses the file first
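When a Make recipe writes a multi-line file line by line, keep in mind that > truncates the file on every invocation while >> appends, and that backticks inside double quotes trigger command substitution. A small bash sketch of both effects (file names are made up):

```bash
#!/usr/bin/env bash
set -euo pipefail
tmp="$(mktemp -d)"
trap 'rm -rf "$tmp"' EXIT

# ">" truncates the file each time: only the last line survives
echo '# line 1' > "$tmp/overwrite.env"
echo '# line 2' > "$tmp/overwrite.env"

# ">" for the first line and ">>" for every following line keeps all of them
echo '# line 1' > "$tmp/append.env"
echo '# line 2' >> "$tmp/append.env"

# single quotes keep the backticks literal; inside double quotes the shell
# would run `make` as a command substitution instead
echo '# generated via `make`' >> "$tmp/append.env"

overwrite_lines="$(wc -l < "$tmp/overwrite.env" | tr -d ' ')"
append_lines="$(wc -l < "$tmp/append.env" | tr -d ' ')"
last_line="$(tail -n 1 "$tmp/append.env")"
echo "overwrite.env: $overwrite_lines line(s), append.env: $append_lines line(s)"
```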

GCP recipes

For the deployment, we need to communicate with the VM and will use the gcloud cli to run SSH commands via IAP tunneling. The cli requires a couple of default parameters like the VM name, the project id, the availability zone and the location of the key file for the service account, that we conveniently defined in the .make/variables.env file.
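To illustrate how these defaults are used, the following bash sketch assembles the same gcloud compute ssh call as the gcp-ssh-command target below, but only prints it instead of running it. The function name print_gcp_ssh_command is hypothetical, and the variables are read from the environment rather than from .make/variables.env; the gcloud flags are exactly the ones used in the Makefile.

```bash
# Sketch (hypothetical helper): print the gcloud command that gcp-ssh-command
# would run, without executing it. Requires bash for ${!name} and printf %q.
print_gcp_ssh_command() {
  local command="$1" name
  # Same checks as validate-gcp-variables, GCP_* are read from the environment
  for name in GCP_VM_NAME GCP_PROJECT_ID GCP_ZONE; do
    if [ -z "${!name}" ]; then
      echo "$name is undefined" >&2
      return 1
    fi
  done
  if [ -z "$command" ]; then
    echo "COMMAND is undefined" >&2
    return 1
  fi
  local -a args
  args=(gcloud compute ssh "$GCP_VM_NAME"
        --project "$GCP_PROJECT_ID" --zone "$GCP_ZONE"
        --tunnel-through-iap "--command=$command")
  # %q quotes every argument so the printed line can be pasted into a shell
  local quoted
  quoted=$(printf '%q ' "${args[@]}")
  printf '%s\n' "${quoted% }"
}
```

For example, GCP_VM_NAME=my-vm GCP_PROJECT_ID=my-project GCP_ZONE=europe-west3-c print_gcp_ssh_command whoami prints the full gcloud compute ssh ... --tunnel-through-iap --command=whoami line (the values are placeholders).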

The GCP targets are defined in .make/03-00-gcp.mk:

##@ [GCP]

.PHONY: gcp-init
gcp-init: validate-gcp-variables ## Initialize the `gcloud` cli and authenticate docker with the keyfile defined via GCP_DEPLOYMENT_SERVICE_ACCOUNT_KEY.
	@$(if $(GCP_DEPLOYMENT_SERVICE_ACCOUNT_KEY),,$(error "GCP_DEPLOYMENT_SERVICE_ACCOUNT_KEY is undefined"))
	@$(if $(GCP_PROJECT_ID),,$(error "GCP_PROJECT_ID is undefined"))
	gcloud auth activate-service-account --key-file="$(GCP_DEPLOYMENT_SERVICE_ACCOUNT_KEY)" --project="$(GCP_PROJECT_ID)"
	cat "$(GCP_DEPLOYMENT_SERVICE_ACCOUNT_KEY)" | docker login -u _json_key --password-stdin https://gcr.io

.PHONY: validate-gcp-variables
validate-gcp-variables:
	@$(if $(GCP_PROJECT_ID),,$(error "GCP_PROJECT_ID is undefined"))
	@$(if $(GCP_ZONE),,$(error "GCP_ZONE is undefined"))
	@$(if $(GCP_VM_NAME),,$(error "GCP_VM_NAME is undefined"))

# @see https://cloud.google.com/sdk/gcloud/reference/compute/ssh
.PHONY: gcp-ssh-command
gcp-ssh-command: validate-gcp-variables ## Run an arbitrary SSH command on the VM via IAP tunnel. Usage: `make gcp-ssh-command COMMAND="whoami"`
	@$(if $(COMMAND),,$(error "COMMAND is undefined"))
	gcloud compute ssh $(GCP_VM_NAME) --project $(GCP_PROJECT_ID) --zone $(GCP_ZONE) --tunnel-through-iap --command="$(COMMAND)"

.PHONY: gcp-ssh-login
gcp-ssh-login: validate-gcp-variables ## Log into a VM via IAP tunnel
	gcloud compute ssh $(GCP_VM_NAME) --project $(GCP_PROJECT_ID) --zone $(GCP_ZONE) --tunnel-through-iap

# @see https://cloud.google.com/sdk/gcloud/reference/compute/scp
.PHONY: gcp-scp-command
gcp-scp-command: validate-gcp-variables ## Copy a file via scp to the VM via IAP tunnel. Usage: `make gcp-scp-command SOURCE="foo" DESTINATION="bar"`
	@$(if $(SOURCE),,$(error "SOURCE is undefined"))
	@$(if $(DESTINATION),,$(error "DESTINATION is undefined"))
	gcloud compute scp $(SOURCE) $(GCP_VM_NAME):$(DESTINATION) --project $(GCP_PROJECT_ID) --zone $(GCP_ZONE) --tunnel-through-iap

# Defines the default secret version to retrieve from the Secret Manager
SECRET_VERSION?=latest

# @see https://cloud.google.com/sdk/gcloud/reference/secrets/versions/access
.PHONY: gcp-secret-get
gcp-secret-get: ## Retrieve and print the secret $(SECRET_NAME) in version $(SECRET_VERSION) from the Secret Manager
	@$(if $(SECRET_NAME),,$(error "SECRET_NAME is undefined"))
	@$(if $(SECRET_VERSION),,$(error "SECRET_VERSION is undefined"))
	@gcloud secrets versions access $(SECRET_VERSION) --secret=$(SECRET_NAME)

.PHONY: gcp-docker-exec
gcp-docker-exec: ## Run a command in a docker container on the VM. Usage: `make gcp-docker-exec DOCKER_SERVICE_NAME="application" DOCKER_COMMAND="echo 'Hello world!'"`
	@$(if $(DOCKER_SERVICE_NAME),,$(error "DOCKER_SERVICE_NAME is undefined"))
	@$(if $(DOCKER_COMMAND),,$(error "DOCKER_COMMAND is undefined"))
	"$(MAKE)" -s gcp-ssh-command COMMAND="cd $(CODEBASE_DIRECTORY) && make docker-exec DOCKER_SERVICE_NAME='$(DOCKER_SERVICE_NAME)' DOCKER_COMMAND='$(DOCKER_COMMAND)'"

# @see https://cloud.google.com/compute/docs/instances/view-ip-address
.PHONY: gcp-show-ip
gcp-show-ip: ## Show the IP address of the VM specified by GCP_VM_NAME.
	gcloud compute instances describe $(GCP_VM_NAME) --zone $(GCP_ZONE) --project=$(GCP_PROJECT_ID) --format='get(networkInterfaces[0].accessConfigs[0].natIP)'

- gcp-init ensures that the correct service account is activated for the gcloud cli and is also used for docker authentication
- validate-gcp-variables is the counterpart of validate-docker-variables in the .make/02-00-docker.mk file and checks if the default variables GCP_PROJECT_ID, GCP_ZONE and GCP_VM_NAME are defined. See also section Enforce required parameters
- gcp-ssh-command is used to run arbitrary SSH commands on the VM using gcloud compute ssh. The command is defined via the COMMAND variable
- gcp-ssh-login is a convenience target to log into the GCP VM via SSH using IAP tunneling
- gcp-scp-command copies files from the local system to the VM via scp using gcloud compute scp. The source must be defined via the SOURCE variable and the destination via the DESTINATION variable
- gcp-secret-get retrieves a secret from the Secret Manager. The secret has to be specified via the SECRET_NAME variable, and an optional version can be given via the SECRET_VERSION variable (which defaults to latest if omitted)
- gcp-docker-exec runs the gcp-ssh-command target to execute the docker-exec target on the VM, inside the $(CODEBASE_DIRECTORY) directory
- gcp-show-ip retrieves the IP address of the VM on GCP as explained in the GCP documentation at https://cloud.google.com/compute/docs/instances/view-ip-address
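The nesting in gcp-docker-exec is easier to follow when the remote command is spelled out. The bash sketch below (build_remote_docker_exec_command is a hypothetical name) assembles the string that gcp-docker-exec passes as COMMAND to gcp-ssh-command, using the same single-quote wrapping as the Makefile. It only prints the string and runs nothing.

```bash
# Sketch (hypothetical helper): build the command that gcp-docker-exec sends
# to the VM via gcp-ssh-command. /tmp/codebase mirrors CODEBASE_DIRECTORY
# from .make/05-00-deployment.mk.
CODEBASE_DIRECTORY="${CODEBASE_DIRECTORY:-/tmp/codebase}"

build_remote_docker_exec_command() {
  local service="$1" docker_command="$2"
  # Same guards as the $(if ...) checks in the Makefile
  if [ -z "$service" ]; then
    echo "DOCKER_SERVICE_NAME is undefined" >&2
    return 1
  fi
  if [ -z "$docker_command" ]; then
    echo "DOCKER_COMMAND is undefined" >&2
    return 1
  fi
  # Values are wrapped in single quotes, like in the Makefile; a value that
  # itself contains a single quote would need additional escaping.
  printf "cd %s && make docker-exec DOCKER_SERVICE_NAME='%s' DOCKER_COMMAND='%s'\n" \
    "$CODEBASE_DIRECTORY" "$service" "$docker_command"
}
```

For example, build_remote_docker_exec_command application "php -v" prints cd /tmp/codebase && make docker-exec DOCKER_SERVICE_NAME='application' DOCKER_COMMAND='php -v', which is the string that ends up in the --command flag of the IAP SSH call.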

Infrastructure recipes

For the infrastructure, we currently only have a single target defined in the .make/04-00-infrastructure.mk file:

##@ [Infrastructure]

.PHONY: infrastructure-setup-vm
infrastructure-setup-vm: ## Set the GCP VM up
	bash .infrastructure/setup-gcp.sh

- infrastructure-setup-vm runs the .infrastructure/setup-gcp.sh script

Deployment recipes

The Deployment workflow is described in more detail in the following section, thus I'll only show the corresponding targets defined in .make/05-00-deployment.mk here:
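The two deployment-guard-* targets in the following listing share one pattern: abort with a non-zero exit code if a check reports changes, unless the corresponding IGNORE_* variable is set. The bash sketch below isolates that pattern. guard_changes and hunk_headers are hypothetical helpers, and unlike the Makefile they take the check output as input, so they can be tried without git or the secret tooling. Note that the real secret guard additionally aborts when make secret-diff itself fails.

```bash
# Sketch of the guard pattern behind deployment-guard-uncommitted-changes and
# deployment-guard-secret-changes (hypothetical helpers, not from the repo).
#   $1: output of the check, e.g. "$(git status -s)"
#   $2: value of the IGNORE_* flag; any non-empty value skips the abort
#   $3: label used in the messages
guard_changes() {
  local changes="$1" ignore="$2" label="$3"
  if [ -z "$changes" ]; then
    printf 'No %s found!\n' "$label"
  elif [ -n "$ignore" ]; then
    printf 'Ignoring %s because the IGNORE flag is set\n' "$label"
  else
    printf 'Found %s => ABORTING\n' "$label" >&2
    printf '%s\n' "$changes" >&2
    return 1
  fi
}

# Mirrors the `grep ^@@` filter of the secret guard: only diff hunk headers
# count as changes. `|| true` keeps "no match" from being treated as an error.
hunk_headers() {
  grep '^@@' || true
}

# Possible usage inside a git repository:
#   guard_changes "$(git status -s)" "${IGNORE_UNCOMMITTED_CHANGES:-}" "uncommitted changes" || exit 1
```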

##@ [Deployment]

.PHONY: deploy
deploy: # Build all images and deploy them to GCP
	@printf "$(GREEN)Switching to 'local' environment$(NO_COLOR)\n"
	@make --no-print-directory make-init
	@printf "$(GREEN)Starting docker setup locally$(NO_COLOR)\n"
	@make --no-print-directory docker-up
	@printf "$(GREEN)Verifying that there are no changes in the secrets$(NO_COLOR)\n"
	@make --no-print-directory gpg-init
	@make --no-print-directory deployment-guard-secret-changes
	@printf "$(GREEN)Verifying that there are no uncommitted changes in the codebase$(NO_COLOR)\n"
	@make --no-print-directory deployment-guard-uncommitted-changes
	@printf "$(GREEN)Initializing gcloud$(NO_COLOR)\n"
	@make --no-print-directory gcp-init
	@printf "$(GREEN)Switching to 'prod' environment$(NO_COLOR)\n"
	@make --no-print-directory make-init ENVS="ENV=prod TAG=latest"
	@printf "$(GREEN)Creating build information file$(NO_COLOR)\n"
	@make --no-print-directory deployment-create-build-info-file
	@printf "$(GREEN)Building docker images$(NO_COLOR)\n"
	@make --no-print-directory docker-build
	@printf "$(GREEN)Pushing images to the registry$(NO_COLOR)\n"
	@make --no-print-directory docker-push
	@printf "$(GREEN)Creating the deployment archive$(NO_COLOR)\n"
	@make --no-print-directory deployment-create-tar
	@printf "$(GREEN)Copying the deployment archive to the VM and running the deployment$(NO_COLOR)\n"
	@make --no-print-directory deployment-run-on-vm
	@printf "$(GREEN)Clearing deployment archive$(NO_COLOR)\n"
	@make --no-print-directory deployment-clear-tar
	@printf "$(GREEN)Switching to 'local' environment$(NO_COLOR)\n"
	@make --no-print-directory make-init

# directory on the VM that will contain the files to start the docker setup
CODEBASE_DIRECTORY=/tmp/codebase

IGNORE_SECRET_CHANGES?=

.PHONY: deployment-guard-secret-changes
deployment-guard-secret-changes: ## Check if there are any changes between the decrypted and encrypted secret files
	if ( ! make secret-diff || [ "$$(make secret-diff | grep ^@@)" != "" ] ) && [ "$(IGNORE_SECRET_CHANGES)" = "" ] ; then \
	    printf "Found changes in the secret files => $(RED)ABORTING$(NO_COLOR)\n\n"; \
	    printf "Use with IGNORE_SECRET_CHANGES=true to ignore this warning\n\n"; \
	    make secret-diff; \
	    exit 1; \
	fi
	@echo "No changes in the secret files!"

IGNORE_UNCOMMITTED_CHANGES?=

.PHONY: deployment-guard-uncommitted-changes
deployment-guard-uncommitted-changes: ## Check if there are any git changes and abort if so. The check can be ignored by passing `IGNORE_UNCOMMITTED_CHANGES=true`
	if [ "$$(git status -s)" != "" ] && [ "$(IGNORE_UNCOMMITTED_CHANGES)" = "" ] ; then \
	    printf "Found uncommitted changes in git => $(RED)ABORTING$(NO_COLOR)\n\n"; \
	    printf "Use with IGNORE_UNCOMMITTED_CHANGES=true to ignore this warning\n\n"; \
	    git status -s; \
	    exit 1; \
	fi
	@echo "No uncommitted changes found!"

# FYI: make converts all new lines into spaces when they are echo'd
# @see https://stackoverflow.com/a/54068252/413531
# To execute a shell command via $(command), the $ has to be escaped with another $
#  ==> $$(command)
# @see https://stackoverflow.com/a/26564874/413531
.PHONY: deployment-create-build-info-file
deployment-create-build-info-file: ## Create a file containing version information about the codebase
	@echo "BUILD INFO" > ".build/build-info"
	@echo "==========" >> ".build/build-info"
	@echo "User :" $$(whoami) >> ".build/build-info"
	@echo "Date :" $$(date --rfc-3339=seconds) >> ".build/build-info"